Top 7 Open-Source Tools for Prompt Engineering in 2025

Explore the top open-source tools for prompt engineering in 2025 and how they can improve AI model performance and streamline development workflows.

Want to improve your AI application's performance? The right tools make all the difference. In 2025, prompt engineering is crucial for getting accurate, consistent output from large language models (LLMs), and open-source tools are leading the way. Here’s a quick rundown of the top 7 tools and what they offer:

- Agenta: Simplifies prompt testing with version control and side-by-side LLM comparisons.

- LangChain: Modular framework for building complex workflows with reusable prompts and memory.

- PromptAppGPT: Low-code platform for fast prototyping and team collaboration.

- Prompt Engine: Focuses on reducing bias and improving prompt precision with real-time feedback.

- PromptLayer: Tracks and manages prompt versions with analytics for enterprise-scale use.

- OpenPrompt: Offers advanced template systems and evaluation tools for detailed prompt workflows.

- Latitude: Connects domain experts and engineers to build production-ready LLM solutions.

Quick Comparison

| Tool | Best For | Key Strengths | Learning Curve | LLM Support |
|---|---|---|---|---|
| Agenta | Versioning, testing prompts | Multi-LLM comparison, fast development | Moderate | Multiple |
| LangChain | Modular workflows | Reusable templates, memory | Steep | GPT, LLaMA, Mistral |
| PromptAppGPT | Fast prototyping | Low-code, team collaboration | Low | GPT-3/4, DALL-E |
| Prompt Engine | Bias reduction, precision | Real-time feedback, analytics | Moderate | Multiple |
| PromptLayer | Enterprise-scale management | Version control, monitoring | Low | Multiple |
| OpenPrompt | Advanced workflows | Dynamic templates, evaluation | High | Multiple |
| Latitude | Production-ready solutions | Collaboration, scalability | Moderate | Multiple |

These tools streamline prompt engineering, from crafting reusable templates to managing large-scale applications. Pick the one that fits your team’s needs, technical expertise, and project goals.

1. Agenta

Agenta is an open-source LLMOps platform designed to simplify the process of creating, testing, and deploying language model applications. It offers a streamlined approach to prompt engineering, making it easier for developers and AI practitioners to work efficiently.

Key Features

Agenta includes a Prompt Playground that lets users refine prompts and compare outputs from over 50 LLMs side by side. It treats prompts like code, complete with version control, and provides tools for systematic evaluation and refinement using both automated metrics and human feedback.
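
To make "prompts as code" concrete, here is a minimal sketch of version-tracked prompts in plain Python. The `PromptRegistry` class and its `commit`/`get` methods are hypothetical illustrations of the idea, not Agenta's SDK; a real setup would persist versions instead of keeping them in memory.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store that keeps every version of each named prompt."""

    versions: dict[str, list[str]] = field(default_factory=dict)

    def commit(self, name: str, template: str) -> int:
        """Append a new version of a prompt and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: int | None = None) -> str:
        """Return the requested version, or the latest one when no version is given."""
        history = self.versions[name]
        return history[-1] if version is None else history[version - 1]


registry = PromptRegistry()
registry.commit("summarize", "Summarize this text: {text}")
v2 = registry.commit("summarize", "Summarize this text in 3 bullet points: {text}")
print(v2)  # 2
print(registry.get("summarize", version=1))  # Summarize this text: {text}
print(registry.get("summarize").format(text="Agenta treats prompts like code."))
```

Because older versions are never overwritten, rolling back is just a matter of calling `get(name, version=n)`.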

Use Cases

Agenta is particularly useful in these scenarios:

- RAG Applications: Supports retrieval-augmented generation (RAG) workflows, which combine language models with external data to produce more precise, grounded results [4].

- Enterprise Solutions: Supports large-scale applications with customizable workflows and quick API deployment.

- Collaborative Development: Facilitates teamwork between developers and domain experts using both UI and code-based tools.
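
The RAG use case boils down to retrieving relevant passages and placing them in the prompt as context. The sketch below shows that prompt-assembly step under simplifying assumptions: the three `DOCUMENTS` are made up, and word-overlap ranking stands in for the embedding-based vector search a production RAG pipeline would use. None of these function names come from Agenta.

```python
from __future__ import annotations

import re

# Hypothetical mini knowledge base standing in for an external data source.
DOCUMENTS = [
    "Agenta supports both cloud-based and self-hosted deployments.",
    "LangChain provides PromptTemplate, Memory, Agents, and Chains.",
    "PromptLayer tracks prompt versions and usage analytics.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, docs: list[str]) -> str:
    """Insert the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


print(build_rag_prompt("Can Agenta be self-hosted?", DOCUMENTS))
```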

Advantages

Agenta brings several benefits to prompt engineering workflows:

| Advantage | Details |
|---|---|
| Fast Development | Build and launch LLM applications in just a minute using pre-built templates. |
| Flexible Hosting | Offers both cloud-based and self-hosted options to meet security needs. |
| Side-by-side Testing | Compare and optimize prompts and models directly. |
| Version Tracking | Manage changes and maintain multiple prompt versions throughout development. |

Agenta's integrated approach reduces development time while ensuring high-quality outputs. Its support for both text and chat prompts, along with seamless integration into existing codebases via the Agenta SDK, makes it a strong choice for teams working on AI-driven projects.
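
Side-by-side testing amounts to running every prompt variant against every model and laying the outputs out in a grid. The sketch below assumes two hypothetical stub models (`model_a`, `model_b`) in place of real API clients; `compare` is an illustrative helper, not part of the Agenta SDK.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical stand-ins for real model clients: each maps a prompt to a completion.
Model = Callable[[str], str]


def model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


def compare(prompts: dict[str, str], models: dict[str, Model],
            **variables: str) -> dict[tuple[str, str], str]:
    """Fill every prompt variant, run it on every model, and return a (prompt, model) grid."""
    grid = {}
    for prompt_name, template in prompts.items():
        filled = template.format(**variables)
        for model_name, model in models.items():
            grid[(prompt_name, model_name)] = model(filled)
    return grid


results = compare(
    {"v1": "Translate: {text}", "v2": "Translate to French: {text}"},
    {"model-a": model_a, "model-b": model_b},
    text="hello",
)
for (prompt_name, model_name), output in results.items():
    print(f"{prompt_name:<3} {model_name:<8} {output}")
```

Filling the template once per variant and reusing it across models keeps the comparison fair: every model sees exactly the same prompt text.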

While Agenta covers the entire prompt engineering process, other tools may specialize in specific areas, such as modular workflows or simplified integrations.

2. LangChain

LangChain is an open-source framework designed to help developers create advanced applications powered by large language models (LLMs). It offers a set of tools tailored for building complex AI systems, especially through advanced prompt engineering techniques.

Key Features

Four of LangChain's core components address common prompt engineering challenges, such as maintaining context and ensuring consistent results:

| Component | Purpose |
|---|---|
| PromptTemplate | Create reusable prompts with variable inputs |
| Memory | Maintain context across multiple interactions |
| Agents | Automate multi-step or complex tasks |
| Chains | Combine components for intricate workflows |
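
To show what the PromptTemplate and Chains rows mean in practice, here is a dependency-free sketch of both ideas. `SimplePromptTemplate`, `chain`, and `fake_llm` are hypothetical names written for illustration; they mimic the concepts rather than LangChain's actual classes, so consult LangChain's documentation for its real interfaces.

```python
from __future__ import annotations

from dataclasses import dataclass
from string import Formatter
from typing import Callable


@dataclass
class SimplePromptTemplate:
    """Hypothetical reusable prompt with named variables (the PromptTemplate idea)."""

    template: str

    @property
    def input_variables(self) -> list[str]:
        # Formatter().parse yields (literal, field_name, spec, conversion) tuples.
        return [name for _, name, _, _ in Formatter().parse(self.template) if name]

    def format(self, **kwargs: str) -> str:
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing variables: {sorted(missing)}")
        return self.template.format(**kwargs)


def chain(*steps: Callable[[str], str]) -> Callable[[str], str]:
    """Compose steps so each step's output becomes the next step's input (the Chains idea)."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"<completion of: {prompt}>"


summarize = SimplePromptTemplate("Summarize for a {audience}: {text}")
pipeline = chain(lambda text: summarize.format(audience="beginner", text=text), fake_llm)
print(summarize.input_variables)  # ['audience', 'text']
print(pipeline("LangChain composes prompts and models."))
```

Checking for missing variables up front turns a silent, half-filled prompt into an explicit error, which is much of the value a template abstraction adds over raw f-strings.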

Use Cases

LangChain shines in scenarios requiring precise and adaptable prompt engineering, including:

- Conversational AI Systems: Develop chatbots that can handle multi-turn conversations while keeping context intact.

- Document Processing: Build workflows to analyze and extract data from various types of documents.

- Custom AI Agents: Design agents capable of executing multi-step tasks based on user inputs.
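
Keeping context intact across turns usually means replaying recent history inside each new prompt. The sketch below shows a simple sliding-window memory; `BufferMemory` and its methods are hypothetical and only illustrate the concept behind LangChain's Memory component, not its implementation.

```python
from __future__ import annotations

from collections import deque


class BufferMemory:
    """Hypothetical sliding-window memory that keeps only the last `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        # deque(maxlen=...) silently drops the oldest exchange once the window is full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def render(self, new_message: str) -> str:
        """Prepend the stored history so the model sees earlier turns as context."""
        lines = [f"User: {u}\nAssistant: {a}" for u, a in self.turns]
        lines.append(f"User: {new_message}\nAssistant:")
        return "\n".join(lines)


memory = BufferMemory(max_turns=2)
memory.save("Hi, I'm Ana.", "Hello Ana!")
memory.save("I like hiking.", "Great hobby!")
memory.save("Any trail tips?", "Start with short, well-marked routes.")
print(memory.render("What's my name?"))
```

With `max_turns=2`, the first exchange has already been evicted, so the model can no longer see Ana's name. Choosing the window size trades token cost against how much context survives.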

Advantages

LangChain offers several features that make it a go-to choice for developers working on LLM-based projects:

- Easy Integration: Works smoothly with popular LLMs like GPT, LLaMA, and Mistral, offering ready-to-use components that save time [1].

- Modular Design: Promotes consistent and organized prompt creation through reusable templates and components.

- Streamlined Development: Speeds up the development process with pre-built tools and well-structured workflows.

LangChain’s modular approach makes it especially suited for large-scale AI projects. Its open-source nature, coupled with an active developer community, ensures regular updates and detailed documentation, making it accessible to developers of all experience levels.

While LangChain is great for building modular workflows, tools like PromptAppGPT focus more on simplifying integrations and enhancing usability.

3. PromptAppGPT

PromptAppGPT offers a low-code platform designed to simplify AI application development, specifically for GPT-3/4 and DALL-E. It stands out by making the process accessible to both technical and non-technical users, encouraging collaboration in crafting effective prompts.

Key Features

| Feature | Description |
|---|---|
| Customized AI Interactions | Tools for creating advanced prompts that enhance engagement |
| Natural Language Processing | Delivers accurate responses and interprets input effectively |
| Multi-Language Support | Designed for global enterprises with diverse language needs |
| Security Framework | Ensures data protection and adheres to privacy regulations |
| Analytics Dashboard | Provides real-time insights into prompt performance and model accuracy |

Use Cases and Advantages

PromptAppGPT's low-code platform empowers users to create AI solutions without requiring extensive coding skills. Here's how it can be applied:

- Fast Prototyping: Quickly build and test AI applications with minimal effort.

- Enterprise Integration: Works seamlessly with existing systems, offering multi-language support and robust security.

- Customer Support: Develop smart chatbots and automation tools to enhance user experience.

- Team Collaboration: Perfect for teams that include both technical and non-technical members.

- Performance Monitoring: Use built-in analytics to refine and improve AI interactions over time.

The platform also supports scalable and efficient development through:

- Speed: Cuts down on development time and resource needs.

- Cost Savings: Reduces dependency on highly specialized developers.

- Scalability: Handles projects of varying sizes with ease.

- Continuous Improvement: Monitors AI performance to ensure quality.

With its user-friendly design and robust features, PromptAppGPT is a great option for teams that value quick deployment and ease of use. For those seeking more advanced customization and workflows, tools like Prompt Engine may be a better fit.

4. Prompt Engine

Prompt Engine focuses on solving a key challenge in prompt engineering: creating precise outputs while reducing bias.

Key Features

| Feature | Description |
|---|---|
| Real-time Feedback and Analytics | Provides instant analysis and actionable tips to improve prompt performance |
| Bias Detection | Spots potential biases in prompts and suggests corrections |
| Multi-model Support | Works with various language models for flexible use |
| Advanced NLP | Uses cutting-edge NLP techniques to enhance prompt understanding and output quality |
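
Real bias detection is far harder than word matching, but the basic "flag and suggest" loop described in the table can be sketched in a few lines. The `SUGGESTIONS` lexicon and `flag_terms` function are hypothetical and deliberately tiny; this is not how Prompt Engine implements the feature.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately tiny lexicon: flagged term -> suggested neutral wording.
SUGGESTIONS = {
    "chairman": "chairperson",
    "manpower": "workforce",
    "guys": "everyone",
}


def flag_terms(prompt: str) -> list[tuple[str, str]]:
    """Return (term, suggestion) pairs for whole-word, case-insensitive matches."""
    found = []
    for term, suggestion in SUGGESTIONS.items():
        if re.search(rf"\b{re.escape(term)}\b", prompt, flags=re.IGNORECASE):
            found.append((term, suggestion))
    return found


print(flag_terms("Ask the chairman how much manpower the project needs."))
# [('chairman', 'chairperson'), ('manpower', 'workforce')]
```

Word boundaries (`\b`) keep the check from firing on substrings inside unrelated words; anything subtler, such as stereotyped framing, needs model-based or human review.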

Use Cases

Prompt Engine is ideal for:

- Customer Service: Design consistent, context-aware response systems.

- Educational Content: Develop learning materials that adapt to students' needs.

- Virtual Assistants: Create AI assistants with natural, conversational interactions.

- Content Optimization: Fine-tune prompts for better accuracy and relevance.

Advantages

The tool’s user-friendly framework and real-time feedback reduce development time while ensuring high-quality AI outputs. This minimizes the need for extensive fixes after deployment. Its low-code interface makes it easy for both technical and non-technical team members to collaborate effectively, fitting seamlessly into existing workflows.

If your team needs tools for refining prompts, Prompt Engine offers a strong mix of accessibility and advanced features. It stands out with its focus on real-time adjustments and bias detection, while tools like PromptLayer specialize in managing and tracking prompt histories to improve overall workflows.

5. PromptLayer

PromptLayer is a specialized tool built for managing prompts and monitoring large language models (LLMs). It simplifies the process of creating, testing, and deploying prompts on a large scale.

Key Features

| Feature | Description |
|---|---|
| Visual Prompt Editor | A no-code interface that lets you edit and deploy prompts without needing engineers |
| Enterprise-Scale Version Control | Tracks and manages prompt versions with a detailed change history, ideal for large teams |
| Analytics Dashboard | Tracks usage patterns, performance metrics, and areas for improvement |
| Testing Tools | Includes built-in A/B testing and comparison features for evaluating prompts |
| Multi-Model Support | Works with different LLMs using a unified prompt template system |
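
The A/B testing row describes a common pattern: split traffic between prompt variants, then compare outcomes. Below is a minimal sketch of that pattern, not PromptLayer's API. `VARIANTS`, `assign_variant`, `success_rates`, and the logged events are hypothetical; hashing the user ID keeps each user on the same variant across requests.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict

VARIANTS = {"A": "Summarize: {text}", "B": "Summarize in one sentence: {text}"}


def assign_variant(user_id: str) -> str:
    """Deterministically bucket a user into A or B so they always see the same prompt."""
    digest = hashlib.sha256(user_id.encode()).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def success_rates(events: list[tuple[str, bool]]) -> dict[str, float]:
    """Aggregate (variant, success) events into a per-variant success rate."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [successes, trials]
    for variant, ok in events:
        totals[variant][0] += int(ok)
        totals[variant][1] += 1
    return {v: s / n for v, (s, n) in totals.items()}


prompt = VARIANTS[assign_variant("user-42")].format(text="Quarterly report...")
# Hypothetical logged outcomes, e.g. whether a user accepted the summary.
events = [("A", True), ("A", False), ("B", True), ("B", True)]
print(success_rates(events))  # {'A': 0.5, 'B': 1.0}
```

With only a handful of events, a gap like 0.5 versus 1.0 is noise; a real comparison needs enough traffic and a significance test before one variant is promoted.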

How It Helps

PromptLayer is designed to help businesses improve efficiency, cut debugging time, and save engineering resources. Here’s how it stands out:

- Scaling Operations: It supports workflows for generating large amounts of content, managing enterprise-level customer service, and creating educational materials.

- Improving Workflows: The no-code interface speeds up iterations, lets non-technical users contribute to prompt design, and provides detailed analytics for tracking costs, performance, and latency.

- Boosting Team Collaboration: It ensures smooth teamwork between technical and non-technical members, offers structured version control for safer updates, and provides monitoring tools for feedback.
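
Tracking cost, performance, and latency starts with recording metadata on every model call. The sketch below wraps a model function to log latency and rough token counts per request; `CallLog` and `tracked` are hypothetical helpers, not PromptLayer's SDK, and whitespace word counts are only a crude proxy for a real tokenizer.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CallLog:
    """Hypothetical request log capturing what an analytics dashboard would chart."""

    records: list[dict] = field(default_factory=list)

    def tracked(self, prompt_name: str, model: Callable[[str], str]) -> Callable[[str], str]:
        """Wrap a model call so every request records latency and rough token counts."""
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            output = model(prompt)
            self.records.append({
                "prompt_name": prompt_name,
                "latency_s": time.perf_counter() - start,
                # Whitespace word count is only a crude stand-in for real token counts.
                "input_tokens": len(prompt.split()),
                "output_tokens": len(output.split()),
            })
            return output
        return wrapper


log = CallLog()
echo = log.tracked("greeting-v3", lambda p: f"Echo: {p}")
echo("Hello there")
print(log.records[0]["prompt_name"], log.records[0]["input_tokens"])  # greeting-v3 2
```

Tagging each record with a prompt name is what makes per-version analytics possible later: costs and latencies can be grouped by the prompt that produced them.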

Here’s what one industry professional had to say:

"We iterate on prompts 10s of times every single day. It would be impossible to do this in a SAFE way without PromptLayer."

PromptLayer shines in managing large-scale prompt workflows, complementing tools like LangChain and Agenta with its focus on monitoring and team collaboration. While PromptLayer specializes in observability and scaling, tools like OpenPrompt cater to modular and flexible prompt engineering needs.

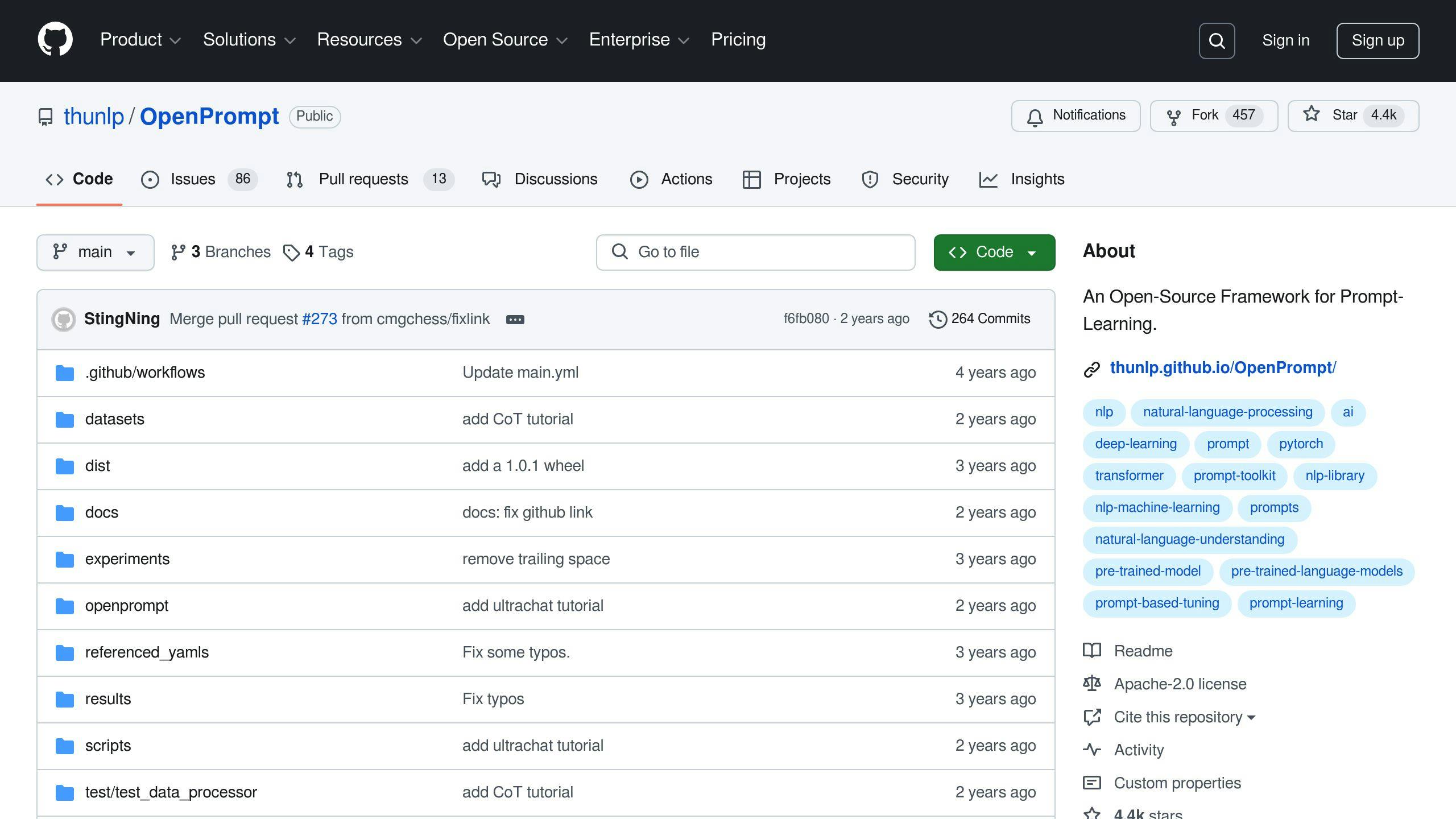

6. OpenPrompt

OpenPrompt stands out as an open-source framework designed for advanced prompt engineering. Its modular setup allows for detailed control over workflows, setting it apart from tools like PromptLayer, which focus more on monitoring and scaling.

Key Features

| Feature | Description |
|---|---|
| Template System | A powerful template engine that supports dynamic variables and conditional logic |
| Multi-Model Integration | Seamlessly works with GPT-3/4 and Hugging Face models using unified APIs |
| Evaluation Framework | Offers metrics and tools to gauge how well prompts perform |
| Context Management | Handles context sensitivity and resolves ambiguity effectively |
| Template Library | Includes pre-designed templates tailored for common tasks |
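
Conditional logic in a template usually means sections that appear only when their inputs exist. The plain-Python sketch below illustrates that idea; `render_prompt` is a hypothetical helper and does not reproduce OpenPrompt's own template API.

```python
from __future__ import annotations


def render_prompt(task: str, text: str,
                  examples: list[tuple[str, str]] | None = None,
                  tone: str | None = None) -> str:
    """Hypothetical template: fills variables and adds optional sections only when needed."""
    parts = [f"Task: {task}"]
    if tone:  # conditional section, added only when a tone is requested
        parts.append(f"Use a {tone} tone.")
    for example_input, example_output in examples or []:  # optional few-shot examples
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    parts.append(f"Input: {text}\nOutput:")
    return "\n\n".join(parts)


print(render_prompt(
    "Classify the sentiment as positive or negative.",
    "The update fixed every bug I reported.",
    examples=[("Crashes on launch.", "negative")],
))
```

Omitting empty sections entirely, rather than leaving blank headings, keeps prompts short and avoids confusing the model with placeholders that carry no content.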

Use Cases

OpenPrompt shines in a variety of specialized scenarios:

| Application | Implementation |
|---|---|
| Text Generation | Uses refined templates to produce consistent, high-quality outputs |
| Question Answering | Employs context-aware prompts for precise information retrieval |
| Conversational AI | Manages dialogues intelligently, including memory retention for better interactions |
| Model Evaluation | Provides a robust testing suite to assess prompt performance |
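
An evaluation framework, at its simplest, scores a prompt against labeled examples. Here is a minimal exact-match accuracy sketch; `evaluate`, `toy_model`, and the three-example dataset are hypothetical, and real suites layer richer metrics (semantic similarity, human ratings) on top of this loop.

```python
from __future__ import annotations

from typing import Callable


def evaluate(template: str, model: Callable[[str], str],
             dataset: list[tuple[str, str]]) -> float:
    """Exact-match accuracy of one prompt template over (input, expected) pairs."""
    correct = 0
    for text, expected in dataset:
        prediction = model(template.format(text=text)).strip().lower()
        correct += int(prediction == expected.lower())
    return correct / len(dataset)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in model: labels anything containing "great" as positive."""
    return "positive" if "great" in prompt.lower() else "negative"


data = [("A great release.", "positive"), ("Too slow.", "negative"), ("Not great.", "negative")]
print(evaluate("Sentiment of: {text}", toy_model, data))
```

The third example fails because the toy model ignores negation, which is exactly the kind of error a labeled test set exposes before a prompt or model change ships.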

Advantages

| Advantage | Impact |
|---|---|
| Faster Development | Streamlined workflows enable quicker iterations of prompts |
| Cost Efficiency | Reduces computational expenses through better prompt management |

With its focus on flexibility and accuracy, OpenPrompt is particularly useful for teams tackling complex language model projects. It also comes with detailed documentation and an active community, making it easier for newcomers to get started.

While OpenPrompt is built for precision and modularity in advanced workflows, the next tool, Latitude, takes a different path, emphasizing collaboration and production deployment.

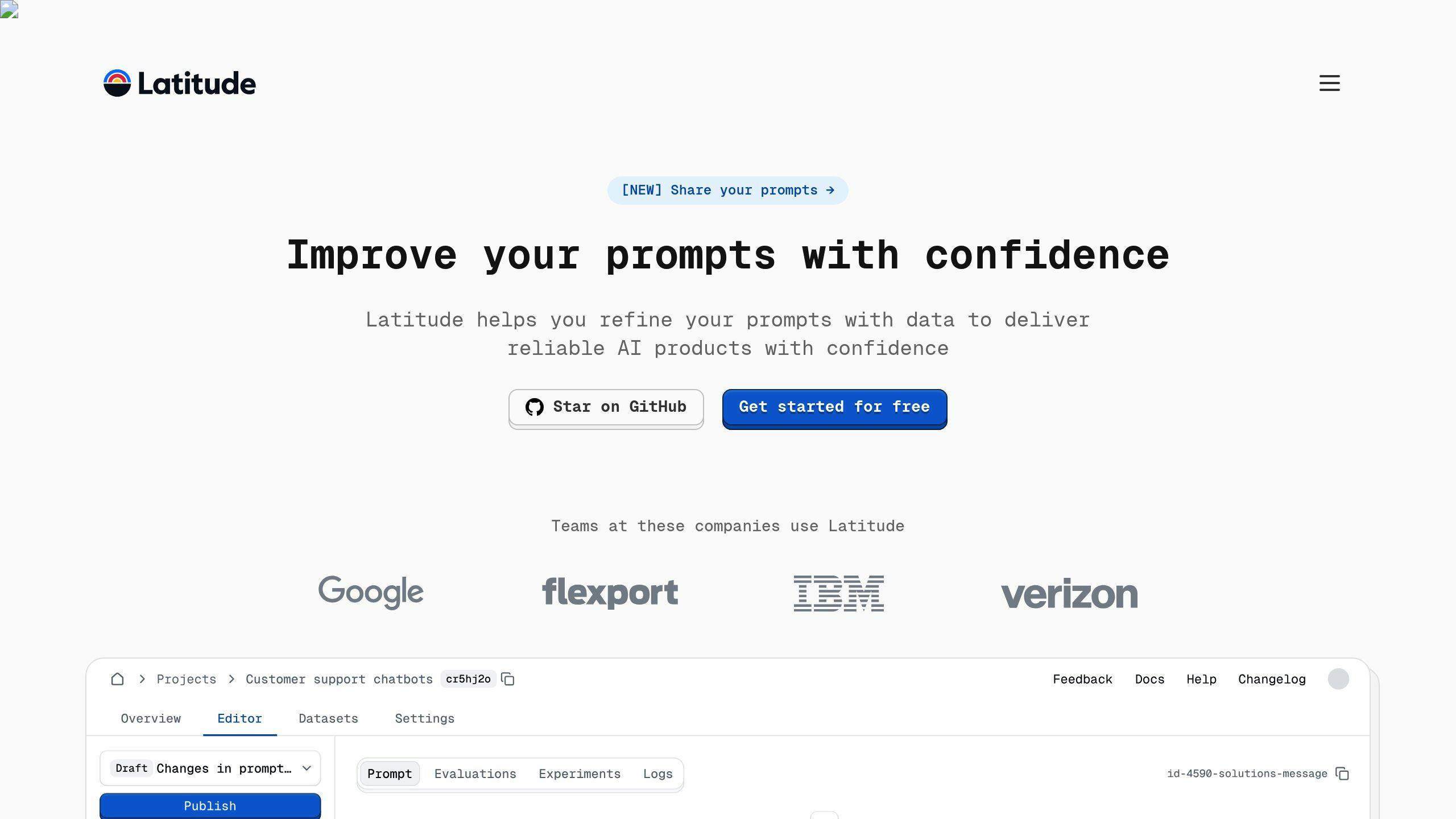

7. Latitude

OpenPrompt is great for modular workflows, but Latitude focuses on connecting domain experts and engineers to create production-ready LLM solutions.

Key Features

| Feature | Description |
|---|---|
| Dynamic Templates | Use templates with variables to handle complex scenarios. |
| Collaborative Interface | A workspace for domain experts and engineers to collaborate effectively. |
| Production Integration | Deploy enterprise-grade LLM applications directly. |
| Modular Architecture | A customizable framework to support various integrations. |
| Evaluation Tools | Tools for testing prompts, measuring performance, and refining outputs. |

Use Cases

Latitude is a strong choice for enterprise environments, especially for:

- Developing and maintaining production-grade LLM applications.

- Managing complex, multi-step workflows.

- Aligning domain expertise with engineering needs.

- Integrating with enterprise systems.

The platform helps cut down development time, improves teamwork across departments, ensures quality through rigorous testing, and scales easily from prototypes to full production. Its structured approach to prompt design is particularly helpful for organizations deploying AI solutions on a large scale.

Latitude also integrates with popular LLMs and existing AI frameworks, making it a practical tool for teams handling complex AI projects.

As an open-source tool, Latitude benefits from an active community that continually improves its functionality, making it a dependable choice for teams looking to stay ahead in AI innovation.

Comparison Table

Here's a breakdown of seven open-source tools and their features:

| Feature/Tool | Agenta | LangChain | PromptAppGPT | Prompt Engine | PromptLayer | OpenPrompt | Latitude |
|---|---|---|---|---|---|---|---|
| Primary Focus | Integrated prompt tools | Framework for LLM apps | Low-code development | Prompt design | Prompt management | Modular workflows | Enterprise collaboration |
| Key Strengths | Versioning, evaluation | Multi-LLM support | Quick application builds | Template management | Analytics and monitoring | Research-oriented | Production-ready tools |
| Technical Needs | Python | Python, JavaScript | Minimal coding | Python | REST API | Python | Customizable framework |
| Best For | Advanced prompt design | Dynamic applications | Fast prototyping | Template-based dev | Enterprise monitoring | Academic research | Cross-team collaboration |
| LLM Support | Multiple | GPT, LLaMA, Mistral | GPT-3/4, DALL-E | Multiple | Multiple | Multiple | Multiple |
| Learning Curve | Moderate | Steep | Low | Moderate | Low | High | Moderate |
| Integration & Scale | High, seamless | High, extensive | Moderate | Moderate | High, advanced features | Moderate | High, large-scale ready |

Highlights by Tool

- LangChain: Offers robust integration and supports multiple LLMs, making it a great choice for complex applications [1][3].

- PromptAppGPT: Perfect for quick prototyping with its low-code approach [5][2].

- Latitude: Built for enterprise-level collaboration, especially between domain experts and engineering teams.

Factors to Consider When Choosing

When deciding on the right tool, focus on these aspects:

- The technical expertise of your team

- Scale and complexity of your project

- How well it integrates with your existing systems

- Requirements for monitoring and analytics

Match the tool's strengths to your specific project's needs to ensure the best fit.

Conclusion

Open-source tools in 2025 have brought advanced options for prompt engineering, making life easier for developers and AI practitioners.

LangChain's PromptTemplate and Memory features have reshaped how prompts are designed and fine-tuned [1][3]. Agenta helps tackle version control and evaluation issues, while Latitude bridges the gap between domain experts and engineers, enabling production-ready applications.

Choosing the right tool depends on your project’s specific needs. Here are some factors to think about:

- Technical Requirements: Does the tool match your team's expertise and project complexity?

- Scalability: Can it grow with your project over time?

- Integration: How well does it fit into your existing systems?

- Monitoring: Does it offer the level of analytics and tracking you need?

Each tool brings something different to the table. LangChain is great for flexible frameworks [1][3], while PromptAppGPT offers a simple way for teams new to prompt engineering to get started [5].

The key to success lies in understanding your use case and picking tools that align with your goals. These open-source solutions have changed how teams design, test, and manage prompts, making prompt engineering more efficient and effective in 2025. The right mix of technical features and real-world usability will determine how well these tools serve your AI projects.

FAQs

Are LLMs free?

Open-source LLMs might not come with a price tag, but running them isn't free. The real expense comes from the computational power and infrastructure needed to operate them, especially for large-scale use cases [1][3]. Key cost drivers include:

- Infrastructure: Paying for cloud services or maintaining high-performance local setups.

- Scalability: Costs increase as your application grows in size and complexity.

To manage these costs:

- Streamline model architectures for efficiency.

- Use cost-effective cloud service tiers.

- Implement smart resource management techniques.

Platforms like Google Cloud and AWS SageMaker offer various pricing options to match different needs. Additionally, tools such as PromptLayer and LangChain can help you monitor usage and manage prompts efficiently, which may reduce operational expenses.
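
To see why costs increase as your application grows, it helps to run the numbers. The sketch below compares a usage-based estimate (GPU time actually consumed) with an always-on instance. The $2.00/hour GPU rate, 0.9 GPU-seconds per request, and 20,000 daily requests are hypothetical inputs, not quotes from any cloud provider.

```python
def usage_based_cost(requests_per_day: int, gpu_seconds_per_request: float,
                     hourly_rate: float, days: int = 30) -> float:
    """Monthly cost if you pay only for the GPU time that requests actually use."""
    gpu_hours = requests_per_day * days * gpu_seconds_per_request / 3600
    return gpu_hours * hourly_rate


def always_on_cost(hourly_rate: float, instances: int = 1, days: int = 30) -> float:
    """Monthly cost of keeping GPU instances running around the clock."""
    return instances * 24 * days * hourly_rate


# Hypothetical inputs: 20,000 requests/day, 0.9 GPU-seconds each, $2.00 per GPU-hour.
print(round(usage_based_cost(20_000, 0.9, 2.00), 2))  # 300.0
print(always_on_cost(2.00))  # 1440.0
```

The gap between the two figures is why the resource-management point above matters: an idle always-on GPU costs as much as a busy one, while doubling traffic doubles the usage-based figure.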

Grasping these cost factors is crucial when choosing tools for prompt engineering, as they directly influence scalability and implementation strategies.