Prompt Versioning: Best Practices

Learn best practices for prompt versioning to enhance AI collaboration, ensure clarity, and streamline recovery processes.

Prompt versioning lets you manage and track changes to AI prompts the way version control tracks changes to software. It helps maintain quality, simplifies troubleshooting, and improves team collaboration. The key practices for getting started:

- Use Semantic Versioning (X.Y.Z): Track major, minor, and patch updates (e.g., v1.0.0 to v1.1.0 for a minor update).

- Keep Prompts Clear: Include precise instructions, examples, and limitations for better LLM responses.

- Document Changes: Log updates, monitor performance, and control access to avoid errors.

- Prepare for Rollbacks: Use tools like feature flags and checkpoints to quickly revert faulty updates.

Tools like Latitude, Lilypad, and LangSmith simplify prompt management, versioning, and recovery. Start by assessing your current process, selecting the right tools, and setting up workflows for consistent and reliable results.

Best Practices for Managing Prompt Versions

Using Version Control for Prompts

Managing prompt versions is similar to handling software updates but tailored for interactions with large language models (LLMs). The goal is to systematically track and manage changes to prompts over time [1].

A good approach is to use semantic versioning (SemVer), which relies on a three-part version number (X.Y.Z):

| Version Component | Purpose | Example Change |
|---|---|---|
| Major (X) | For changes that alter the prompt's structure significantly | Overhauling the prompt framework |
| Minor (Y) | For adding new features or context parameters | Introducing additional context |
| Patch (Z) | For small fixes like correcting typos or minor tweaks | Fixing grammar issues |
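
As a minimal sketch of how the X.Y.Z scheme above could be applied in code, the following Python class parses a version string and bumps the right component. The `PromptVersion` name and its methods are hypothetical and not part of any tool mentioned in this article; resetting the lower components on a bump follows the usual SemVer convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    """Hypothetical helper: a three-part version number for a prompt."""
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "PromptVersion":
        # Accept both "1.2.3" and "v1.2.3".
        major, minor, patch = (int(part) for part in text.lstrip("v").split("."))
        return cls(major, minor, patch)

    def bump(self, change: str) -> "PromptVersion":
        # Bumping a component resets every component to its right.
        if change == "major":
            return PromptVersion(self.major + 1, 0, 0)
        if change == "minor":
            return PromptVersion(self.major, self.minor + 1, 0)
        if change == "patch":
            return PromptVersion(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown change type: {change!r}")

    def __str__(self) -> str:
        return f"v{self.major}.{self.minor}.{self.patch}"
```

For example, `PromptVersion.parse("v1.0.0").bump("minor")` gives `v1.1.0`, while a structural overhaul of the same prompt would call `bump("major")` and give `v2.0.0`.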

While version control helps organize prompt updates, keeping the design clear and structured is just as important for reliable performance.

Ensuring Clear Prompt Contexts

A well-defined prompt context is crucial for guiding LLMs to deliver consistent and accurate results. Prompt engineering revolves around creating inputs that are both specific and actionable [2].

To achieve this, include:

- Precise instructions: Clearly state what the LLM should do.

- Relevant examples: Provide examples that clarify expectations.

- Defined limitations: Outline what the LLM should avoid or focus on.

Once the prompt is clear, versioning becomes easier, ensuring every update serves a clear purpose.
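
One way to keep those three elements explicit is to store them as separate fields and render the prompt from them, so a version diff shows exactly which part changed. This is only an illustrative sketch: the `PromptSpec` class, its field names, and the section labels it prints are assumptions, not a format required by any LLM or tool.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the three elements of a clear prompt."""
    instructions: str
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, output) pairs
    limitations: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Build the prompt section by section, skipping empty sections.
        sections = [f"Instructions:\n{self.instructions}"]
        if self.examples:
            shots = "\n".join(f"Input: {q}\nOutput: {a}" for q, a in self.examples)
            sections.append(f"Examples:\n{shots}")
        if self.limitations:
            rules = "\n".join(f"- {rule}" for rule in self.limitations)
            sections.append(f"Limitations:\n{rules}")
        return "\n\n".join(sections)


spec = PromptSpec(
    instructions="Summarize the support ticket in two sentences.",
    examples=[("Printer offline since Monday", "The printer has been unreachable since Monday.")],
    limitations=["Do not include customer names."],
)
prompt_text = spec.render()
```

Keeping the fields separate also makes it easier to judge the size of a change: editing a limitation is usually a smaller update than rewriting the instructions.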

Tracking Changes in Prompts

Tracking changes effectively requires a mix of manual documentation and automated tools.

Key elements to focus on include:

- Documentation: Record why changes were made and what they aim to achieve.

- Performance Metrics: Monitor how changes affect user satisfaction and overall output quality.

- Access Control: Define who can modify or deploy prompts to maintain consistency.

For enterprise settings, it's important to allow multiple teams or stakeholders to test and deploy prompts without disrupting core systems [3]. This ensures flexibility while maintaining control.
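
The three elements above (documentation, metrics, access control) can be combined in a small change log. The sketch below is a hypothetical in-memory version; the `ChangeRecord` and `PromptChangeLog` names, the metric keys, and the editor list are all assumptions for illustration, and a real setup would persist the records somewhere durable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChangeRecord:
    version: str
    author: str
    reason: str                 # why the change was made
    metrics: dict[str, float]   # e.g. satisfaction score measured for this version
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class PromptChangeLog:
    """Hypothetical change log that only accepts entries from approved editors."""

    def __init__(self, editors: set[str]):
        self.editors = editors
        self.records: list[ChangeRecord] = []

    def record(self, version: str, author: str, reason: str, metrics=None) -> ChangeRecord:
        # Access control: reject changes from anyone outside the editor list.
        if author not in self.editors:
            raise PermissionError(f"{author} may not modify this prompt")
        entry = ChangeRecord(version, author, reason, dict(metrics or {}))
        self.records.append(entry)
        return entry


log = PromptChangeLog(editors={"alice"})
log.record("v1.1.0", "alice", "Added refund-policy context", {"satisfaction": 4.3})
```

A call such as `log.record("v1.1.1", "bob", "typo fix")` would raise `PermissionError` here, because `bob` is not in the editor set.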

Strategies for Rollback and Recovery

Implementing Rollback Techniques

Effective rollback methods are crucial for maintaining system stability and keeping operations running smoothly. Automated tools are key here: they help revert to earlier, stable versions when something goes wrong [4].

A well-designed rollback system often uses feature flags and blue/green deployments to handle version changes efficiently. These tools allow teams to:

- Test new versions alongside existing ones.

- Instantly switch back if issues arise.

- Reduce downtime during updates.

- Monitor system performance during transitions.

| Rollback Component | Purpose | How It Works |
|---|---|---|
| Feature Flags | Manage version activation | Enable or disable specific versions without redeploying code. |
| Blue/Green Deployment | Seamless version switching | Run two environments, one serving the stable version and one hosting the new version, so traffic can switch between them instantly. |
| Health Monitoring | Track system performance | Keep an eye on fault rates, latency, and resource usage. |

These rollback tools are essential for keeping things stable during updates, but they work best when paired with checkpointing to save and recover detailed system states.
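
To make the feature-flag idea concrete, here is a minimal sketch in which the "flag" is simply a pointer to the active prompt version, so switching or reverting requires no redeploy. The `PromptRegistry` class is hypothetical; production feature-flag services offer far more (targeting, gradual rollout), which this example does not attempt to model.

```python
class PromptRegistry:
    """Hypothetical registry: the active-version pointer acts as the feature flag."""

    def __init__(self):
        self._versions: dict[str, str] = {}
        self._active: str | None = None
        self._previous: str | None = None

    def register(self, version: str, text: str) -> None:
        self._versions[version] = text

    def activate(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown version: {version}")
        # Remember the outgoing version so it can be restored later.
        self._previous, self._active = self._active, version

    def rollback(self) -> None:
        if self._previous is None:
            raise RuntimeError("no earlier version to roll back to")
        self._active, self._previous = self._previous, None

    def active_prompt(self) -> str:
        if self._active is None:
            raise RuntimeError("no version has been activated")
        return self._versions[self._active]


registry = PromptRegistry()
registry.register("v1.0.0", "Summarize the ticket.")
registry.register("v1.1.0", "Summarize the ticket in two sentences.")
registry.activate("v1.0.0")
registry.activate("v1.1.0")   # roll out the new version
registry.rollback()           # issue detected: switch back to v1.0.0
```

Because both versions stay registered, the faulty one remains available for inspection after the rollback.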

Using Checkpoints for Prompt States

Checkpointing helps maintain a history of prompt versions and makes recovery faster. This involves automatically saving system states at key moments, like after major updates or performance tweaks [1].

When creating checkpoints, monitor these metrics:

- CPU and memory usage.

- Response times and latency.

- Error rates.

- User satisfaction scores.

Platforms like Latitude show how collaborative tools can simplify checkpointing. They help teams track version histories and recover quickly when needed.
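
A checkpoint can be as simple as a labeled snapshot of the prompt text together with the metrics observed at that moment. The following sketch keeps checkpoints in memory; the `CheckpointStore` class and the metric names are assumptions for illustration and do not reflect how Latitude or any other platform stores its history.

```python
import copy


class CheckpointStore:
    """Hypothetical in-memory store of prompt checkpoints."""

    def __init__(self):
        self._checkpoints: list[dict] = []

    def save(self, label: str, prompt_text: str, metrics: dict) -> int:
        # Deep-copy the metrics so later mutations cannot alter the snapshot.
        self._checkpoints.append(
            {"label": label, "prompt": prompt_text, "metrics": copy.deepcopy(metrics)}
        )
        return len(self._checkpoints) - 1

    def restore(self, label: str) -> dict:
        # Return the most recent checkpoint saved under this label.
        for checkpoint in reversed(self._checkpoints):
            if checkpoint["label"] == label:
                return copy.deepcopy(checkpoint)
        raise KeyError(f"no checkpoint labeled {label!r}")


store = CheckpointStore()
live_metrics = {"latency_ms": 820.0, "error_rate": 0.01}
store.save("after-v1.1.0", "Summarize the ticket in two sentences.", live_metrics)
live_metrics["error_rate"] = 0.09   # later drift does not affect the saved snapshot
restored = store.restore("after-v1.1.0")
```

Saving the metrics with the prompt text gives each checkpoint a baseline to compare against when deciding whether a later version has regressed.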

Recovering Prompt Changes

Recovery plans should be clear, automated, and well-documented to minimize disruptions. Here’s what to focus on:

- Recovery Procedures: Use automated tools to detect issues and rely on version histories to track changes, measure performance impacts, and identify affected systems.

| Component | Details to Track |
|---|---|
| Change Description | What was modified? |
| Performance Impact | Metrics before vs. after changes. |
| Dependencies | Systems or tools affected by the change. |
| Recovery Points | Available checkpoints for reverting to earlier versions. |

- Testing Protocol: Regularly run recovery drills to ensure the system works as expected and to identify any weak spots [4].
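
The automated issue detection mentioned under recovery procedures can be sketched as a comparison of a new version's metrics against a stable baseline. Everything specific here is an assumption for illustration: the `rollback_reasons` function, the metric keys, and especially the threshold values, which would need tuning for a real system.

```python
def rollback_reasons(
    baseline: dict,
    candidate: dict,
    max_error_rate: float = 0.05,         # assumed threshold
    max_latency_increase: float = 0.20,   # assumed: 20% slower than baseline
    min_satisfaction: float = 4.0,        # assumed: score on a 1-5 scale
) -> list[str]:
    """Return the reasons to roll back; an empty list means the version is healthy."""
    reasons = []
    if candidate["error_rate"] > max_error_rate:
        reasons.append("error rate above threshold")
    if candidate["latency_ms"] > baseline["latency_ms"] * (1 + max_latency_increase):
        reasons.append("latency regressed against baseline")
    if candidate["satisfaction"] < min_satisfaction:
        reasons.append("user satisfaction below threshold")
    return reasons


baseline = {"error_rate": 0.01, "latency_ms": 800.0, "satisfaction": 4.5}
candidate = {"error_rate": 0.02, "latency_ms": 1000.0, "satisfaction": 4.4}
reasons = rollback_reasons(baseline, candidate)
```

With these sample numbers only the latency check fires, since 1000 ms exceeds the 960 ms limit derived from the baseline. Returning the reasons rather than a bare boolean gives the recovery log something to record.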

"Data and metrics such as fault rates, latency, CPU usage, memory usage, disk usage, and log errors can be used to inform rollback decisions. Key indicators should include performance metrics, user satisfaction, and system health, which can be monitored to identify potential issues and trigger rollbacks when necessary" [4][5].

Tools for Managing Prompt Versions

Once you have rollback and recovery systems in place, the next step is finding the right tools to manage your prompt versions. Thankfully, there are several platforms tailored to the needs of LLM development teams.

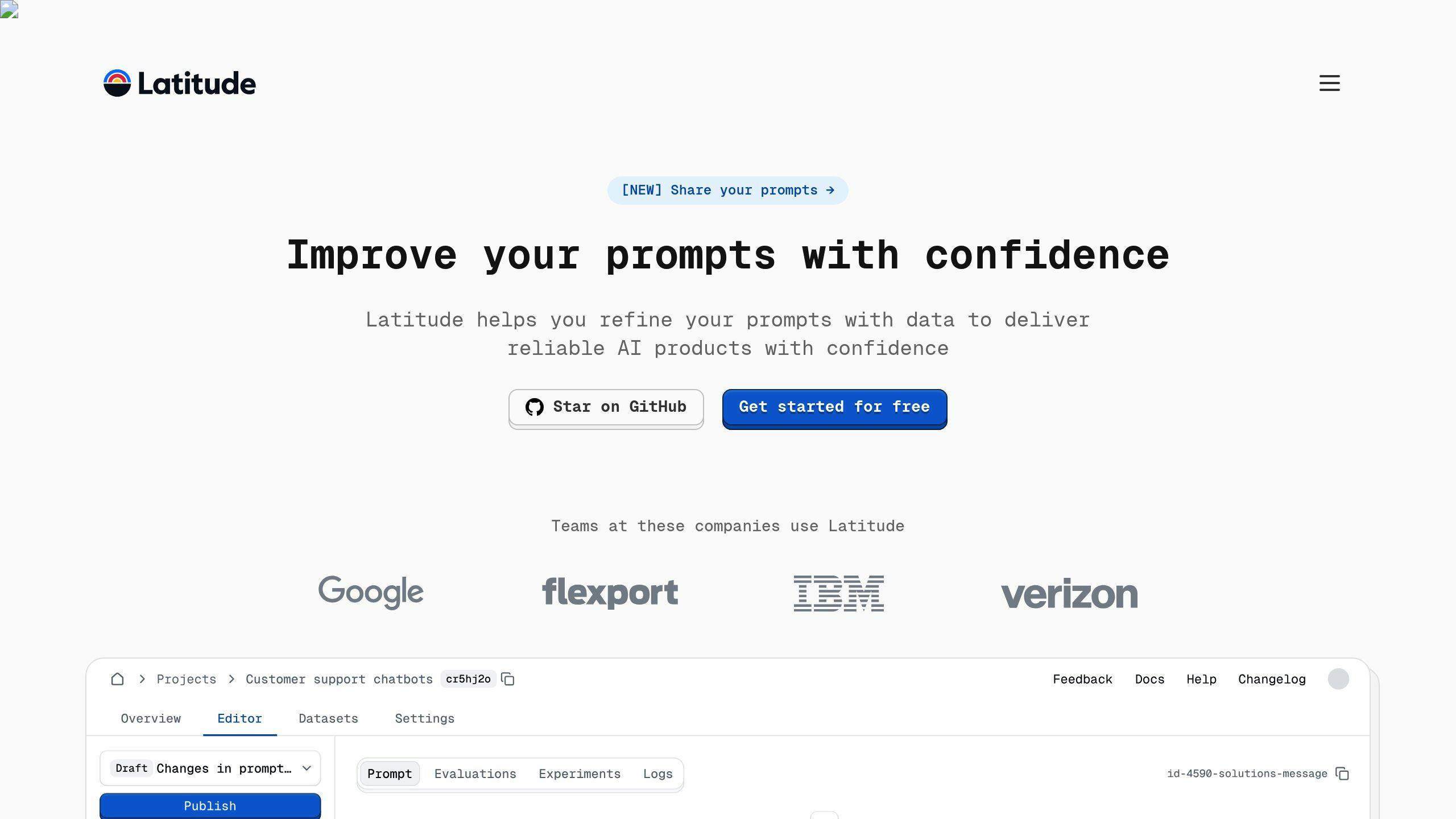

Latitude: Open-Source Platform for Prompt Engineering

Latitude is an open-source platform designed to help prompt engineering teams collaborate effectively. It bridges the gap between domain experts and engineers, enabling them to create and maintain production-level LLM features. Its open design and integration capabilities make it a great fit for teams focused on building collaborative workflows.

Other Tools for Prompt Management

Here are a few other tools that specialize in different aspects of prompt versioning:

- Lilypad: This tool simplifies LLM versioning and tracks dependencies. Key features include:
  - Automated tracking of LLM interactions
  - Dependency tracing
  - Built-in rollback functionality
  - Performance monitoring tools

- LangSmith: Built to work seamlessly with the LangChain ecosystem, LangSmith offers:
  - Centralized prompt versioning
  - Metadata-based change tracking
  - Performance analysis
  - Integration with existing LLM setups

These tools are designed to support efficient version control, team collaboration, and recovery processes. By using them, teams can streamline their workflows and maintain consistent productivity.

Conclusion and Key Points

Key Takeaways

Tools like Latitude make managing prompt engineering tasks easier by supporting collaboration and efficient version control. Having strong rollback strategies is critical for maintaining stable systems and managing prompt changes effectively. These practices provide a solid framework for building a reliable prompt versioning system into your workflow.

Steps to Get Started

To improve collaboration and version tracking, consider integrating a centralized prompt repository into your development process.

Here’s a simple framework to guide implementation:

| Phase | Action Items | Expected Outcomes |
|---|---|---|
| Assessment | Review current practices and identify gaps | Pinpoint areas needing improvement in versioning and documentation |
| Tool Selection | Pick suitable versioning platforms | Choose tools that align with your team’s needs and infrastructure |
| Implementation | Set up version control and monitoring systems | Create automated workflows for version control and deployment |
| Optimization | Regularly assess and refine processes | Enhance prompt consistency and system reliability |

Keep an eye on rollback success rates and prompt consistency metrics to measure how well the system performs. Ongoing monitoring and updates will ensure your prompt management process continues to improve.
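
The article does not define these two metrics, so the sketch below uses simple assumed definitions: rollback success rate as the share of rollback attempts that succeeded, and consistency as the share of repeated runs that produced the most common output. Both function names are hypothetical.

```python
from collections import Counter


def rollback_success_rate(outcomes: list[bool]) -> float:
    # Fraction of rollback attempts that restored a working version.
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def consistency(outputs: list[str]) -> float:
    # Fraction of runs that agree with the most frequent output (assumed definition).
    if not outputs:
        return 0.0
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


success = rollback_success_rate([True, True, False, True])
agreement = consistency(["refund", "refund", "exchange", "refund"])
```

Exact string matching is a strict notion of consistency; free-form outputs would likely need a looser comparison.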