I Made a Free Tool to Know My Stargazers 🌟

I made a free tool to learn what’s going on with our stargazers.

I have been thinking about the people supporting our repo lately. Every new stargazer means a lot to us, as it validates that we are building the right thing.

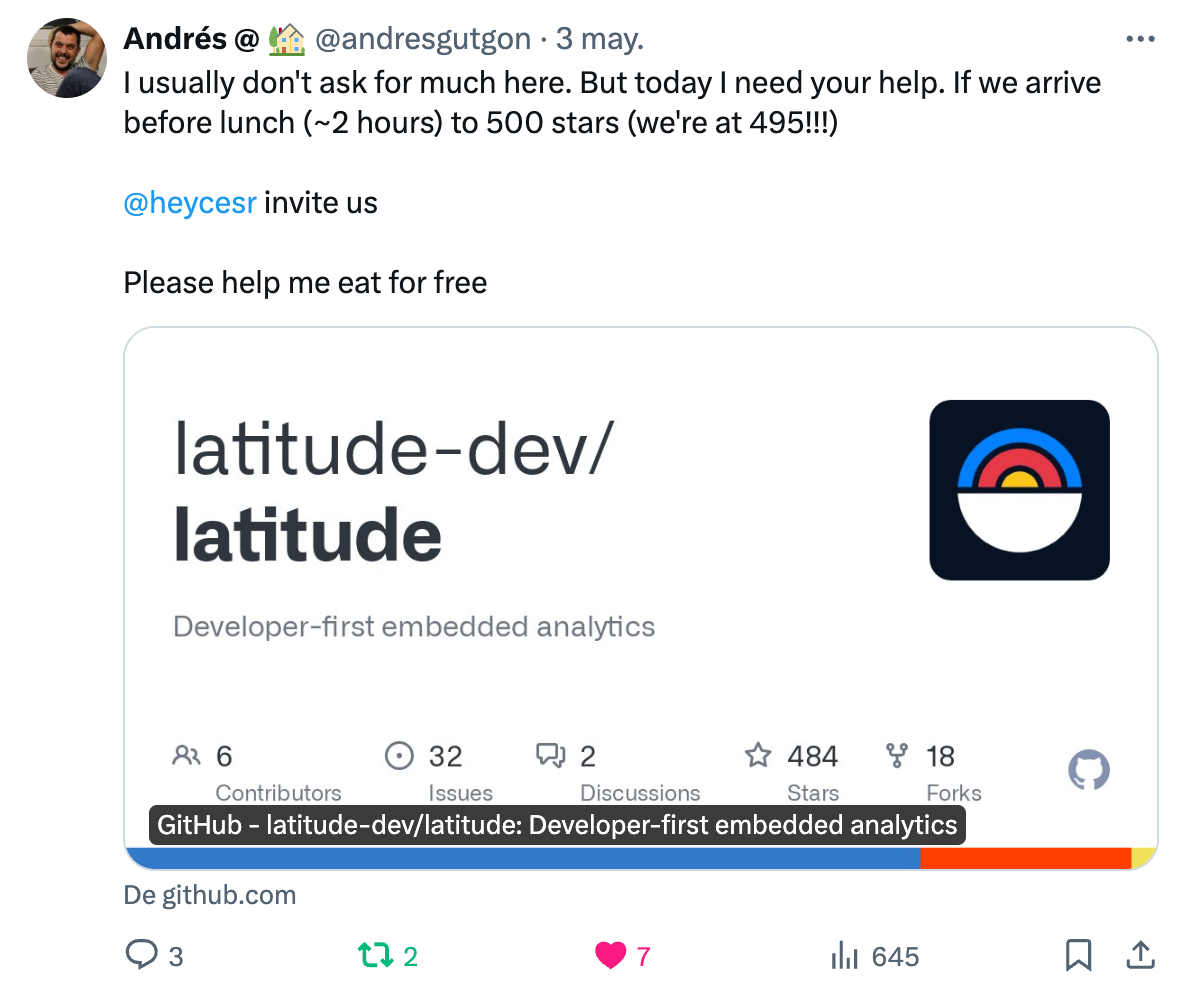

And sometimes, it means even more…

Jokes aside, during the last few weeks, we’ve seen our “star history” go up. In less than a couple of months, we made it to 500 stars on GitHub, which is a positive sign.

We’re quite new in this space, but all the research we’ve done suggests that the number of stars is a good proxy for product interest and usage. So we optimized for that, and it’s working.

But I still felt we were missing something. Even though we are getting more stars every day, we know little to nothing about our stargazers:

- Who are they?

- What do they work on?

- What other repos are interesting for them?

- Who are the people they follow?

- Is there any relevant company interested in our product?

So many questions popped into my mind that I figured other people might be in the same situation. If we can answer all of this, we can help solve more problems for the people who star our repo.

I took a look at GitHub and saw I could get a lot of quantitative info, but the process was very manual. Then I searched for existing repos that solve this, but none fit my needs. Most of them were very old, and I couldn’t make them work.

That’s why I decided to build my own solution.

Here you can see a live demo: https://repo-analysis-new-alfred.latitude.page/

And here you have the link to the repo: https://github.com/latitude-dev/stargazers-analysis

I’ll try to explain step by step how I made it, so you can do the same. Hope this helps!

The GitHub API

First, I had to figure out what data was available. The GitHub API exposes a lot of information about a repo that, once aggregated, can give us really good insights. Here’s what I needed: a flexible dataset to explore, aggregate, and combine the data, and to share it with my peers at Latitude.

At Latitude, we have a way to explore and expose data. The easiest way is to use our DuckDB connector with .csv files. I was only missing the .csv files.

These are the steps I followed later:

1. Using ChatGPT to write Python code with no prior coding knowledge

I thought I could write a small script to get the info from the GitHub API. The API seemed simple, and although I like to code, I’m a product designer, so I needed some help.

I asked ChatGPT for a script that:

- Uses the GraphQL API instead of the REST one, because it allows more requests per minute (2,000 vs 900)

- Uses 2 workers to speed up the process. We could use more, but GitHub recommends against it because we would hit the API limits too quickly

- Manages retries when we hit the GitHub GraphQL API limits. The amount of data is huge, so even though the process runs automatically, the limits make it long. In my case, 500 stargazers took ~2 hours

- Ignores users with more than 5k starred repos. We want aggregated quantitative data to detect common patterns, so people with 40k starred repos wouldn’t add enough value to justify all the requests needed
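The worker, retry, and 5k-filter requirements can be sketched roughly as follows. This is not the script ChatGPT produced. It is a minimal illustration that assumes three hypothetical pieces: a `fetch_user(login)` callable, a `starred_count` key in the dict it returns, and a `RateLimitError` that it raises when GitHub's limits are hit.

```python
import time
from concurrent.futures import ThreadPoolExecutor

MAX_WORKERS = 2      # kept low so we don't hit the API limits too quickly
MAX_STARRED = 5_000  # users above this are ignored
MAX_RETRIES = 5


class RateLimitError(Exception):
    """Hypothetical error a fetch function raises when GitHub's limit is hit."""


def with_retries(fn, *args, max_retries=MAX_RETRIES, base_delay=60.0, sleep=time.sleep):
    """Call fn(*args), waiting twice as long after each rate-limit error."""
    for attempt in range(max_retries + 1):
        try:
            return fn(*args)
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up after the last retry
            sleep(base_delay * 2 ** attempt)  # 60s, 120s, 240s, ...


def should_skip(user):
    """Heavy users cost many requests for little extra signal.

    'starred_count' is a hypothetical key; a real script would check it
    before paginating through the user's starred repos.
    """
    return user.get("starred_count", 0) > MAX_STARRED


def process_stargazers(logins, fetch_user, sleep=time.sleep):
    """Fetch every login with 2 workers, then drop users above the 5k threshold."""
    with ThreadPoolExecutor(max_workers=MAX_WORKERS) as pool:
        users = list(pool.map(lambda login: with_retries(fetch_user, login, sleep=sleep), logins))
    return [u for u in users if not should_skip(u)]
```

The `sleep` parameter is injectable so the logic can be tested without real waits. Exponential backoff is just one reasonable policy. GitHub's responses also carry rate-limit headers (such as `x-ratelimit-reset`), and a real script could use those to wait exactly as long as needed.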

As for the data, the script needed to fetch:

- User info for Latitude’s stargazers

- Other repositories those users have starred

- The people those users follow
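For the first item, the GitHub GraphQL API exposes a repo's stargazers as a paginated connection. The sketch below builds the request body for one page of 100 users and follows `endCursor` until there are no pages left. It makes two assumptions. First, `post_graphql` is a hypothetical callable that sends the body to `https://api.github.com/graphql` and returns the parsed JSON. Second, the selected fields are a plausible subset and not necessarily the ones the repo's script requests.

```python
import json

STARGAZERS_QUERY = """
query($owner: String!, $name: String!, $cursor: String) {
  repository(owner: $owner, name: $name) {
    stargazers(first: 100, after: $cursor) {
      pageInfo { hasNextPage endCursor }
      nodes {
        login
        name
        company
        location
        followers { totalCount }
        starredRepositories { totalCount }
      }
    }
  }
}
"""


def build_payload(owner, name, cursor=None):
    """JSON body for one page of stargazers; cursor=None means the first page."""
    return json.dumps({
        "query": STARGAZERS_QUERY,
        "variables": {"owner": owner, "name": name, "cursor": cursor},
    })


def fetch_all_stargazers(owner, name, post_graphql):
    """Collect every stargazer node; post_graphql(payload) -> parsed JSON dict (hypothetical)."""
    users, cursor = [], None
    while True:
        data = post_graphql(build_payload(owner, name, cursor))
        page = data["data"]["repository"]["stargazers"]
        users.extend(page["nodes"])
        if not page["pageInfo"]["hasNextPage"]:
            return users
        cursor = page["pageInfo"]["endCursor"]  # resume after the last user seen
```

Starred repos and followed users can be fetched with the same cursor loop against each user's `starredRepositories` and `following` connections.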

Once the script finishes, we have four files: user-details.csv, organizations.csv, following.csv, and repos-starred.csv.
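Writing those files only needs Python's standard library. Here is a minimal sketch, assuming each dataset is a list of flat dicts. The column names are whatever keys the dicts carry and may not match the repo's actual files. The default `queries` folder is where Latitude expects the .csv sources.

```python
import csv
from pathlib import Path


def write_csv(rows, filename, folder="queries"):
    """Write a list of flat dicts to folder/filename, one column per key."""
    path = Path(folder)
    path.mkdir(parents=True, exist_ok=True)
    fieldnames = sorted({key for row in rows for key in row})  # union of all keys
    with open(path / filename, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)  # keys missing from a row become empty cells
    return path / filename
```

For example, `write_csv(users, "user-details.csv")` would save one row per stargazer.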

This was the first time I felt the power of ChatGPT, no bullshit. It wasn’t super easy, mostly because I had to learn about the API limits along the way, but in short, I got exactly what I needed. Here’s the code.

2. Run the script and get the .csv files

Once the script is in place, let’s see how it works. In the repo, you can download everything you need. The steps to run the script are the following:

Initial requirements

- Before starting, make sure you have Python installed. You can check the official page here: https://www.python.org/downloads/

- Generate a GitHub access token so you can authenticate with the GitHub API later. You can generate it from https://github.com/settings/tokens

- To start developing in this project, first ensure you have Node.js >= 18 installed. Then, install the Latitude CLI:

npm install -g @latitude-data/cli

In the file fetch_stargazers_data.py, replace the repo owner and repo name on lines 14 and 15 with those of the repo you want to analyze. For example, for github.com/latitude-dev/latitude/ it would look like this:

14 REPO_OWNER = 'latitude-dev'

15 REPO_NAME = 'latitude'
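If you'd rather paste the repo URL than split it by hand, a small helper can do it for you. `parse_repo_url` is a hypothetical addition and not part of the repo's script.

```python
from urllib.parse import urlparse


def parse_repo_url(url):
    """Return (owner, name) from a URL like https://github.com/latitude-dev/latitude/ (hypothetical helper)."""
    parsed = urlparse(url if "://" in url else "https://" + url)
    parts = [p for p in parsed.path.split("/") if p]
    if parsed.netloc not in ("github.com", "www.github.com") or len(parts) < 2:
        raise ValueError(f"Not a GitHub repo URL: {url}")
    owner, name = parts[0], parts[1]
    if name.endswith(".git"):  # tolerate clone URLs
        name = name[:-4]
    return owner, name
```

With it, the two constants could be set in one line: `REPO_OWNER, REPO_NAME = parse_repo_url("github.com/latitude-dev/latitude/")`, which gives `('latitude-dev', 'latitude')`.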

Running the script

- Clone the repo.

- Open the terminal and go to the root of the cloned repo.

- Run

pip install requests

- Then, run the following, replacing YOUR_TOKEN_HERE with the token you generated before:

export GITHUB_TOKEN='YOUR_TOKEN_HERE'

- And finally, run

python3 fetch_stargazers.py

- Important: the script could take a long time to finish. For reference, ~500 stargazers took us ~2 hours.
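Before starting a run that can take hours, it may be worth confirming that the token works and checking how much rate-limit budget is left. Here is a minimal sketch using only the standard library. The `viewer` and `rateLimit` fields belong to GitHub's GraphQL schema, but this check is our own addition and not a step of the repo's script.

```python
import json
import os
import urllib.request

GRAPHQL_URL = "https://api.github.com/graphql"
CHECK_QUERY = "query { viewer { login } rateLimit { limit remaining resetAt } }"


def build_check_request(token=None):
    """Build an authenticated POST; falls back to the GITHUB_TOKEN env variable."""
    token = token or os.environ.get("GITHUB_TOKEN")
    if not token:
        raise RuntimeError("GITHUB_TOKEN is not set; export it before running the script")
    body = json.dumps({"query": CHECK_QUERY}).encode("utf-8")
    return urllib.request.Request(
        GRAPHQL_URL,
        data=body,
        headers={"Authorization": f"bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def check_token():
    """Send the request and print the authenticated user and remaining budget."""
    with urllib.request.urlopen(build_check_request()) as resp:
        data = json.load(resp)["data"]
    limits = data["rateLimit"]
    print(f"Authenticated as {data['viewer']['login']}")
    print(f"Rate limit: {limits['remaining']}/{limits['limit']}, resets at {limits['resetAt']}")
```

Calling `check_token()` after the `export` step should print your login. An HTTP 401 error at that point usually means the token is wrong.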

When it finishes, it saves the 4 .csv files inside the queries folder, which is where the sources must be for Latitude to analyze the data. You can open the .csv files to check that the data is there.
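Instead of opening each file by hand, a short script can confirm that the four files exist and count their rows. This is a sketch that assumes the default queries folder and the four file names this post lists.

```python
import csv
from pathlib import Path

EXPECTED_FILES = ["user-details.csv", "organizations.csv", "following.csv", "repos-starred.csv"]


def summarize_outputs(folder="queries"):
    """Return {filename: number of data rows}, or None for a missing file."""
    summary = {}
    for name in EXPECTED_FILES:
        path = Path(folder) / name
        if not path.exists():
            summary[name] = None
            continue
        with open(path, newline="", encoding="utf-8") as f:
            rows = sum(1 for _ in csv.reader(f))  # csv.reader handles quoted newlines
        summary[name] = max(rows - 1, 0)  # exclude the header row
    return summary
```

For example, you could loop over `summarize_outputs().items()` and print "MISSING" for any `None` value.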

3. Exploring the data with Latitude

At this point, the data is ready, but we want to visualize it. The cloned repo is set up to take your .csv files and, using Latitude and DuckDB, build a data visualization app.

- The queries folder contains the data explorations, written in plain SQL.

- The views folder contains the frontend for the data, built with Latitude components, Svelte, and HTML.

You can modify any .sql query or .html view to adapt it to your needs. For details, see the Latitude docs.

The project is divided into different sections:

- Overview - The top 5 common repos, followed users, and organizations, plus your stargazers with the most followers. Note that the common lists count how many of your repo’s stargazers have starred the same other repo, followed the same other user, and so on. This page also shows some analysis of your stargazers at the bottom.

- Users list - The entire list of stargazers of your repo with their info and filters.

- Repos - The entire list of the common repos starred by your stargazers.

- Following - The entire list of the users followed by your stargazers.

- Location - The entire list of the common locations your stargazers are based in.

- Organization - The entire list of the common organizations your stargazers belong to.

- Company - The entire list of the companies your stargazers work for.
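The "common" lists boil down to counting distinct stargazers per item. The repo does this in SQL with DuckDB, roughly a GROUP BY with COUNT(DISTINCT ...). The sketch below shows the same idea in plain Python. It assumes hypothetical `login` and `repo` column names in repos-starred.csv, so check the real headers in your file.

```python
import csv
from collections import Counter


def top_common_repos(csv_path, n=5, user_col="login", repo_col="repo"):
    """Count how many distinct stargazers starred each repo and return the top n.

    user_col and repo_col are hypothetical column names; adjust them to your file.
    """
    pairs = set()  # (user, repo) pairs, so a duplicated row can't count twice
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            pairs.add((row[user_col], row[repo_col]))
    counts = Counter(repo for _, repo in pairs)
    return counts.most_common(n)
```

Swapping in the following.csv columns gives the "common following" list in the same way.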

To see the app with your project, run latitude dev from the terminal at the root of the project.

4. Sharing with the team

So far, the project runs locally on your machine, but you probably want to share the info with your team. You have 2 options:

- Deploy it on your own. You can follow the docs here.

- Just run latitude deploy and we’ll deploy it to our cloud, giving you a URL that’s easy to share. You can follow the docs here.

I believe this tool is really valuable for everyone who wants to know more about their GitHub repo and how to better serve their users and stargazers. Also, since it’s open source, everybody can access it, and I feel it’s a good way to give back to the community that is supporting Latitude.

And that’s it. I hope you find value in what I built :)

I’d love to get your feedback in the comments. Let me know if you have any questions.