Collaborative Prompt Engineering: Best Tools and Methods

Explore collaborative prompt engineering to enhance AI model prompts through teamwork, effective tools, and best practices for improved outcomes.

Collaborative prompt engineering is about teams working together to create better prompts for AI models. It combines diverse expertise, structured workflows, and the right tools to improve prompt quality, reduce bias, and speed up iterations. Here's what you need to know:

- Why It Matters: Collaboration leads to better prompts by leveraging team feedback, diverse perspectives, and peer reviews.

- Challenges: Teams often face issues like version control, asynchronous workflows, and slow testing processes.

- Key Tools:

  - PromptLayer: Tracks prompt changes and performance.

  - OpenAI Playground: Tests and refines prompts instantly.

  - LangSmith: Manages prompt versions and archives iterations.

  - Latitude: Offers real-time analysis and collaboration tools.

  - Shared Platforms: Google Docs and Notion for brainstorming and documentation.

- Best Practices: Plan workflows, use dedicated communication channels, and prioritize testing with tools like Helicone.ai for real-world feedback.

Tools for Collaborative Prompt Engineering

These tools help address challenges like version control, team collaboration, and testing delays, making workflows smoother and more effective.

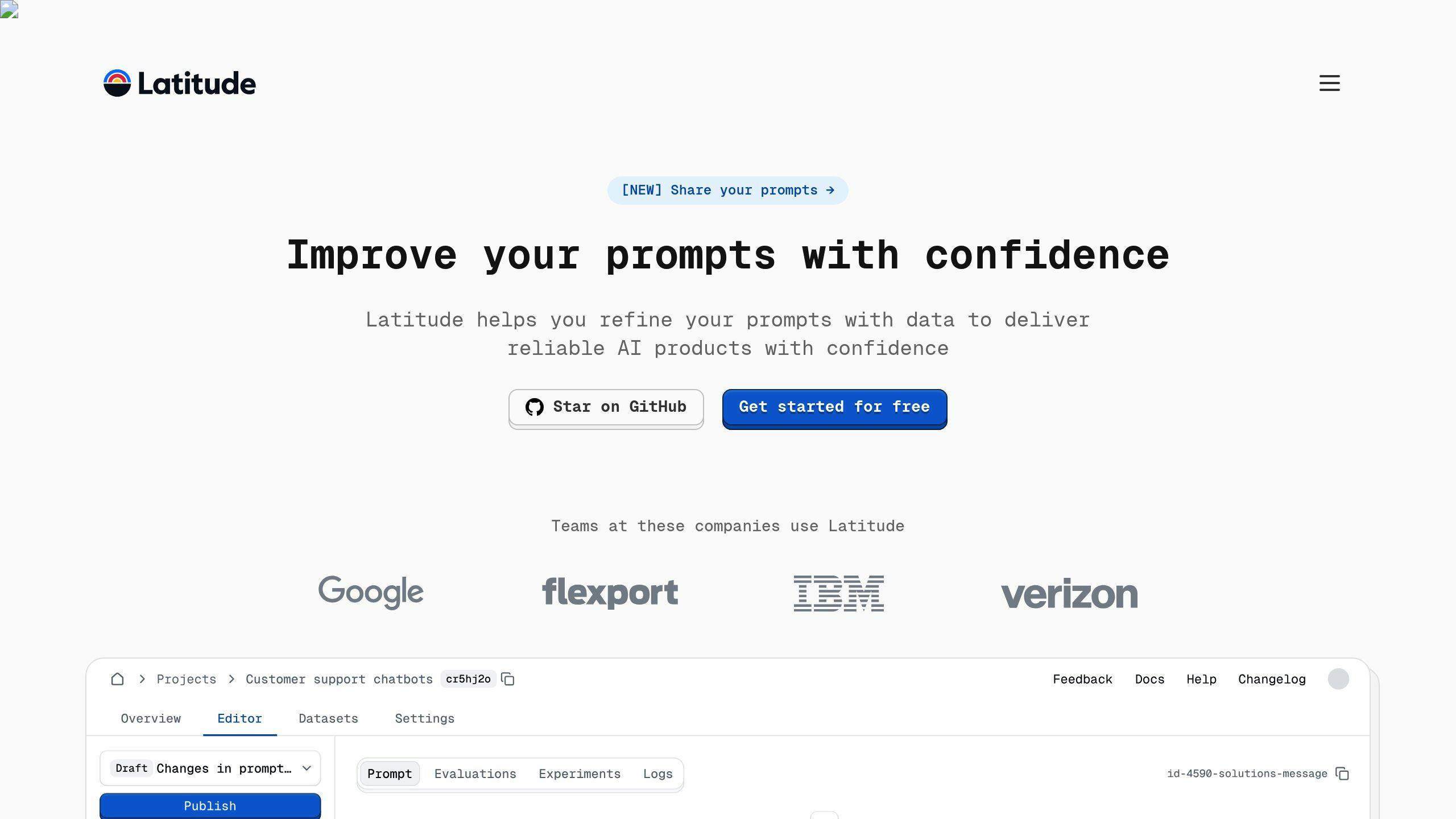

Latitude

Latitude offers open-source tools designed for version control and real-time performance analysis. It helps domain experts and engineers collaborate effectively.

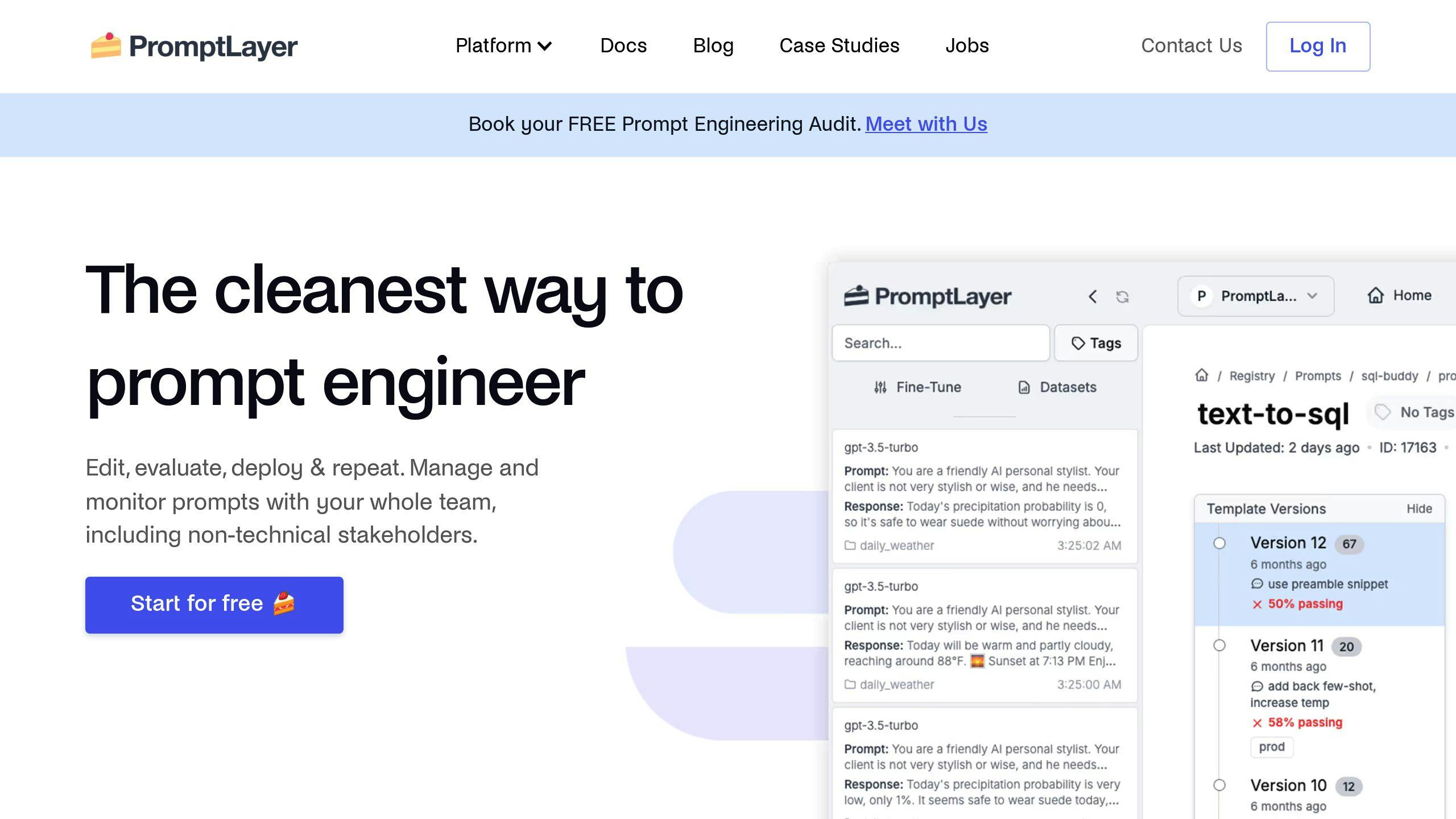

PromptLayer

PromptLayer simplifies version tracking with unique identifiers for each prompt version. This makes it easier to:

- Track changes systematically

- Keep detailed documentation

- Maintain a clear version history

- Assess prompt performance over time
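The idea of one identifier per prompt version can be illustrated without any particular tool. The sketch below is not PromptLayer's API; it is a minimal stand-in that assumes a version ID can be derived from a hash of the prompt text. The names `prompt_version_id` and `PromptRegistry` are hypothetical.

```python
import hashlib


def prompt_version_id(template: str, length: int = 12) -> str:
    """Return a short, deterministic ID derived from the prompt text."""
    # Ignore trailing whitespace so cosmetic edits do not create new versions.
    normalized = "\n".join(line.rstrip() for line in template.strip().splitlines())
    digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
    return digest[:length]


class PromptRegistry:
    """Hypothetical in-memory registry mapping prompt names to version history."""

    def __init__(self) -> None:
        self._versions = {}   # prompt name -> ordered list of version IDs
        self._templates = {}  # version ID -> prompt text

    def register(self, name: str, template: str) -> str:
        """Store a prompt and return its version ID (unchanged text reuses the ID)."""
        version_id = prompt_version_id(template)
        history = self._versions.setdefault(name, [])
        if version_id not in history:
            history.append(version_id)
            self._templates[version_id] = template
        return version_id

    def history(self, name: str) -> list:
        """Return the version IDs recorded for a prompt, oldest first."""
        return list(self._versions.get(name, []))
```

Because the ID is computed from the text itself, two teammates who register the same prompt get the same identifier, and any substantive edit produces a new one.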

Shared Editing Platforms

Collaborative platforms like Google Docs and Notion are invaluable for prompt engineering teams, especially during brainstorming and quick iterations.

| Platform | Key Collaboration Features |
|---|---|
| Google Docs | Real-time editing, comments, and version history |
| Notion | Knowledge base creation, templates, and shared workspaces |

OpenAI Playground

OpenAI Playground is a hands-on tool for testing and refining prompts. Teams can:

- Test how prompts perform instantly

- See how AI reacts to different structures

- Share results with teammates

- Adjust prompts based on real outputs

For even better management and tracking, combine OpenAI Playground with tools like LangSmith [4].

Using these tools, teams can streamline their workflows and focus on improving their prompt engineering strategies.

Best Practices for Team-Based Prompt Engineering

Communication and Workflow Planning

Clear communication and organized workflows are essential for successful prompt engineering. Tools like Slack and Microsoft Teams help teams engage in real-time discussions and share feedback quickly. To streamline collaboration, teams can set up dedicated channels for specific prompt engineering tasks:

| Channel Purpose | Key Activities & Benefits |
|---|---|
| Development & Ideation | Brainstorming ideas, technical discussions, and planning implementations |
| Performance Tracking | Analyzing metrics, sharing test results, and discussing optimization strategies |

Using collaborative platforms for centralized documentation ensures everyone works from the same source of information. This approach keeps decisions and justifications transparent and easily accessible.

Managing Prompt Versions and Iterations

Version control is critical for keeping prompt engineering efforts organized. Tools like PromptLayer help track changes systematically. To keep documentation effective, include:

- Clear descriptions of changes and their purpose

- Performance metrics and analysis of the impact

- Attribution to team members and timestamps for changes
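The three documentation items above map naturally onto a small record type. This is a sketch under assumptions: the `PromptChange` class, its field names, and the Markdown line format are all hypothetical, chosen only to show what a changelog entry could hold.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptChange:
    """One changelog entry for a prompt (hypothetical structure)."""

    version: str
    author: str
    description: str                             # what changed and why
    metrics: dict = field(default_factory=dict)  # e.g. {"accuracy": 0.91}
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def to_markdown(self) -> str:
        """Render the entry as a bullet for a shared doc or wiki page."""
        metric_text = ", ".join(
            f"{name}={value:.2f}" for name, value in sorted(self.metrics.items())
        ) or "no metrics recorded"
        stamp = self.timestamp.strftime("%Y-%m-%d %H:%M")
        return (
            f"- {stamp} UTC | {self.version} | {self.author}: "
            f"{self.description} ({metric_text})"
        )
```

Entries rendered this way can be pasted into the shared documentation the team already uses, so the change history and the rationale live in one place.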

LangSmith offers a centralized way to manage and archive prompt versions, making it easy to compare different iterations. After archiving, prompts should undergo thorough testing to fine-tune their performance.
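Comparing two iterations does not require a dedicated platform to get started. As a rough illustration, and not a depiction of how LangSmith works, Python's standard `difflib` module can produce a line-level diff between two prompt versions. The function name `diff_prompts` and the labels `v1` and `v2` are assumptions made for this example.

```python
import difflib


def diff_prompts(old: str, new: str, old_label: str = "v1", new_label: str = "v2") -> str:
    """Return a unified diff between two prompt versions (empty if identical)."""
    lines = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile=old_label,
        tofile=new_label,
        lineterm="",
    )
    return "\n".join(lines)


if __name__ == "__main__":
    before = "You are a helpful assistant.\nAnswer briefly."
    after = "You are a helpful assistant.\nAnswer in three bullet points."
    print(diff_prompts(before, after))
```

A diff like this pairs well with the change description: reviewers see exactly which instruction changed before looking at the test results.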

Testing and Analyzing Prompt Performance

Testing is the cornerstone of refining prompts. A structured approach to testing includes:

- Creating and evaluating multiple prompt variations

- Measuring key metrics like accuracy, consistency, and user satisfaction

- Using performance data to refine prompts and repeating the testing process
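The testing loop above can be sketched as a small harness. Everything here is an assumption for illustration: `evaluate_variant` is a hypothetical helper, `model` stands for whatever function your team uses to call an LLM, accuracy is measured by exact match against an expected label, and consistency is the share of cases where repeated runs agree. User satisfaction needs human input and is not covered.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

Case = Tuple[Dict[str, str], str]  # (template inputs, expected output)


def evaluate_variant(
    template: str,
    cases: List[Case],
    model: Callable[[str], str],
    runs: int = 3,
) -> Dict[str, float]:
    """Score one prompt variant for accuracy and run-to-run consistency."""
    if not cases:
        raise ValueError("at least one test case is required")
    correct = 0
    consistent = 0
    for inputs, expected in cases:
        prompt = template.format(**inputs)
        # Call the model several times to see whether the answers agree.
        outputs = [model(prompt).strip().lower() for _ in range(runs)]
        majority, count = Counter(outputs).most_common(1)[0]
        if majority == expected.strip().lower():
            correct += 1
        if count == runs:
            consistent += 1
    total = len(cases)
    return {"accuracy": correct / total, "consistency": consistent / total}


def compare_variants(
    variants: Dict[str, str],
    cases: List[Case],
    model: Callable[[str], str],
) -> Dict[str, Dict[str, float]]:
    """Evaluate every named variant against the same test cases."""
    return {name: evaluate_variant(text, cases, model) for name, text in variants.items()}
```

Running every variant against the same cases keeps the comparison fair; exact-match scoring suits classification-style prompts and would need replacing with a rubric or grader for free-form outputs.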

For monitoring prompt performance in production, tools like Helicone.ai provide valuable feedback from real-world usage, supporting continuous improvement.

Advanced Techniques in Prompt Engineering

Fine-Tuning Prompts for Better Results

RLHF (Reinforcement Learning from Human Feedback) is a technique for training models rather than for editing prompts, but its core loop of collecting human judgments and adjusting based on them carries over to systematic prompt refinement. Here's how teams can apply that loop to their prompts:

- Gather consistent human feedback to evaluate outputs.

- Monitor performance metrics throughout iterations.

- Adjust parameters based on measurable outcomes.
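One lightweight way to act on these steps is to aggregate reviewer ratings per prompt variant and rank the results. This is a sketch, not RLHF itself: the function name `rank_variants`, the 1 to 5 rating scale, and the `min_ratings` threshold are all assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_variants(
    ratings: Dict[str, List[int]],
    min_ratings: int = 3,
) -> List[Tuple[str, float]]:
    """Rank prompt variants by mean human rating, best first.

    Variants with fewer than `min_ratings` scores are skipped so that a
    single enthusiastic reviewer cannot decide the outcome.
    """
    scored = [
        (name, float(mean(scores)))
        for name, scores in ratings.items()
        if len(scores) >= min_ratings
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    feedback = {
        "concise": [4, 5, 4],
        "detailed": [3, 3, 4],
        "experimental": [5],  # too few ratings to rank
    }
    for name, score in rank_variants(feedback):
        print(f"{name}: {score:.2f}")
```

The threshold is a design choice: it trades speed for reliability, and teams with few reviewers may prefer to lower it and treat the ranking as provisional.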

For teams working on multi-modal applications, additional challenges often require specialized solutions beyond traditional fine-tuning approaches.

Crafting Prompts for Multi-Modal Applications

Designing prompts for multi-modal applications - those combining text and visuals - requires careful planning. Tools like PromptPerfect make this process easier by allowing integration of both textual and visual elements. To achieve balanced results, teams should define key parameters such as:

- Image resolution and style: Ensure visual elements meet the desired quality and aesthetic.

- Textual tone and structure: Maintain consistency with the visual components.

- Technical specifications: Align both modes for compatibility and clarity.

Collaboration plays a key role in ensuring the text and visuals work together seamlessly, leading to better overall outcomes.

Decoding Prompts Through Reverse Engineering

Reverse engineering prompts means breaking down their components to see how each part influences the final output. This involves identifying patterns that work well, then changing or removing one component at a time and testing whether results improve. Tools like LangSmith can support this process by keeping a record of prompt versions and their outputs, so the effect of each change can be compared.
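A simple way to measure how much each component contributes is an ablation: remove one part at a time and see how the score moves. The sketch below assumes the prompt is stored as named components and that a `score` function (hypothetical, supplied by the team) returns a quality number for a full prompt. `ablate_components` is an illustrative name, not part of any tool mentioned here.

```python
from typing import Callable, Dict


def ablate_components(
    components: Dict[str, str],
    score: Callable[[str], float],
) -> Dict[str, float]:
    """Return the score drop caused by removing each component in turn.

    A large positive value means the component matters; a value near zero
    (or negative) suggests it can be trimmed or rewritten.
    """
    baseline = score("\n".join(components.values()))
    impact = {}
    for name in components:
        reduced = "\n".join(
            text for key, text in components.items() if key != name
        )
        impact[name] = baseline - score(reduced)
    return impact
```

In practice `score` would wrap a test run such as an accuracy measurement over a fixed set of cases, so each ablation costs one full evaluation. Note that this measures components one at a time and will miss interactions between them.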

"By incorporating human feedback into the training loop, these models have demonstrated significant improvements in generating coherent and contextually appropriate responses", notes a recent OpenAI study on RLHF techniques [3].

Conclusion

Summary of Key Points

Collaborative prompt engineering has become an important approach for teams working with large language models. Tools like PromptLayer, LangSmith, and OpenAI Playground make it easier to manage prompts, track different versions, and experiment effectively, helping teams work together more efficiently [1][3]. For instance, LangSmith offers version control features that simplify tracking and comparing prompt updates [2][4].

Next Steps for Teams

To improve their prompt engineering processes, teams should focus on the strategies highlighted in this guide. Here’s how to get started:

- Use version control systems to monitor prompt changes and track performance metrics.

- Incorporate tools like Latitude to improve team collaboration.

- Continuously review and fine-tune prompts to improve their effectiveness.

As collaborative prompt engineering evolves, new tools and methods are constantly being introduced. The key to success lies in maintaining structured workflows while staying flexible enough to adapt to new advancements. By adopting these tools and practices, teams can improve their efficiency and achieve better results in their work with large language models.