10 Best Practices for Production-Grade LLM Prompt Engineering

Learn essential best practices for crafting effective prompts for large language models to enhance accuracy and scalability in production environments.

Crafting effective prompts for large language models (LLMs) in production settings is essential to ensure accuracy, efficiency, and scalability. Poorly designed prompts can lead to inconsistent outputs, errors, and wasted resources. Here’s a quick rundown of the best practices covered below:

- Provide Clear Context: Be specific about the task and include relevant details.

- Customize Prompts for Each Task: Tailor prompts to fit unique use cases.

- Break Tasks into Steps: Simplify complex workflows by dividing tasks.

- Define Output Specifications: Specify the format, tone, and structure you need.

- Validate and Preprocess Inputs: Ensure inputs are clean and standardized.

- Set Personas and Tone: Align the model’s tone with your audience and purpose.

- Use Version Control: Track changes and maintain consistency.

- Fine-Tune Model Parameters: Adjust settings like temperature for task-specific needs.

- Continuously Test and Improve: Regularly monitor and refine prompts.

Why It Matters:

Good prompt engineering ensures accurate, consistent, and efficient outputs, especially in high-demand production environments. Use tools like Latitude, LangChain, and PromptLayer to streamline workflows and integrate prompt optimization into CI/CD pipelines.

By following these practices, you can build reliable and scalable LLM-powered systems that perform well under production conditions.

Best Practices for Production-Grade LLM Prompt Engineering

1. Provide Clear and Detailed Context

Set your LLM up for success by crafting prompts that are clear and context-rich. For example, instead of saying, "Write about AI in healthcare", try something like: "Write a 500-word article on AI's applications in clinical healthcare for professionals" [1].
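
One way to make that level of detail routine is to build prompts from named fields rather than free text. The sketch below is a minimal illustration; `PromptContext` and `build_prompt` are hypothetical names introduced here, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptContext:
    """Hypothetical container for the details a context-rich prompt should state."""
    task: str
    topic: str
    audience: str
    length_words: int


def build_prompt(ctx: PromptContext) -> str:
    """Render the context fields into a single explicit instruction."""
    return (
        f"{ctx.task} of about {ctx.length_words} words on {ctx.topic}. "
        f"The audience is {ctx.audience}."
    )


prompt = build_prompt(
    PromptContext(
        task="Write an article",
        topic="AI's applications in clinical healthcare",
        audience="healthcare professionals",
        length_words=500,
    )
)
print(prompt)
```

Because every field is required, a prompt that omits the audience or length fails at construction time instead of producing a vague request.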

2. Customize Prompts for Each Task

Avoid generic prompts. Tailor each one to fit the specific task by including relevant industry terms, formatting needs, and precise evaluation criteria. This approach ensures the output aligns with your goals [1][2].

3. Break Tasks into Steps

For complex workflows, divide tasks into smaller, logical steps. Use sequential prompts to guide the model through processes like analyzing data, identifying patterns, and drawing conclusions. This method improves reasoning and keeps the output consistent [1].
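
As a rough sketch of sequential prompting, the example below feeds each step's output into the next prompt. The step wording, `run_chain`, and `fake_model` are assumptions made for illustration; `fake_model` stands in for whatever LLM client you actually use.

```python
from typing import Callable, List, Sequence

# Hypothetical step templates; {input} is filled with the previous step's output.
STEPS: Sequence[str] = (
    "Summarize the key figures in this data:\n{input}",
    "Identify patterns in this summary:\n{input}",
    "Draw conclusions from these patterns:\n{input}",
)


def run_chain(
    data: str,
    call_model: Callable[[str], str],
    steps: Sequence[str] = STEPS,
) -> List[str]:
    """Run each step in order, feeding every output into the next prompt."""
    outputs: List[str] = []
    current = data
    for template in steps:
        current = call_model(template.format(input=current))
        outputs.append(current)
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call: echoes the first line of the prompt."""
    return "[response to: " + prompt.splitlines()[0] + "]"


results = run_chain("q1=10, q2=14, q3=21", fake_model)
```

Keeping the intermediate outputs in a list makes it easier to inspect which step went wrong when the final answer looks off.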

4. Define Output Specifications

Clearly outline the desired response format, structure, tone, and length. Whether you need a response in plain text, JSON, or XML, stating this explicitly makes it far more likely that the output meets your requirements. Keep the inputs you pass to the model equally structured and error-free.
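
When the requested format is JSON, the specification can be checked in code as well as stated in the prompt. The following is a minimal sketch; the field names in `REQUIRED_FIELDS` and the wording of `FORMAT_INSTRUCTIONS` are hypothetical and would be replaced by your own contract.

```python
import json
from typing import Any, Dict

# Hypothetical output contract: field name -> expected Python type.
REQUIRED_FIELDS: Dict[str, type] = {"summary": str, "sentiment": str, "confidence": float}

# Text to append to the prompt so the model knows the expected shape.
FORMAT_INSTRUCTIONS = (
    "Respond with a single JSON object and nothing else. "
    "Fields: summary (string), sentiment (string), confidence (number from 0 to 1)."
)


def parse_response(raw: str) -> Dict[str, Any]:
    """Parse model output and raise ValueError if it breaks the contract."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        value = data[name]
        # JSON integers such as 1 are acceptable where a float is expected.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if not isinstance(value, expected):
            raise ValueError(f"field {name} should be {expected.__name__}")
        data[name] = value
    return data
```

A caller can catch the `ValueError` and retry or fall back, rather than passing a malformed response downstream.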

5. Validate and Preprocess Inputs

Reliable outputs start with clean inputs. Use preprocessing to:

- Standardize text and formatting

- Remove inconsistencies

- Address potential security risks

- Ensure inputs meet length restrictions

These steps help maintain accuracy and reduce errors.
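
The preprocessing steps above can be sketched as a single function. This is an illustration under stated assumptions: `MAX_INPUT_CHARS` is a hypothetical limit, and stripping control characters covers only a small part of the security concern; it does not defend against prompt injection.

```python
import re
import unicodedata

MAX_INPUT_CHARS = 4000  # hypothetical limit; set it from your model's context budget

# Matches control characters except tab, newline, and carriage return.
_CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def preprocess(text: str, max_chars: int = MAX_INPUT_CHARS) -> str:
    """Normalize, clean, and length-check user input before it reaches a prompt."""
    text = unicodedata.normalize("NFKC", text)       # standardize Unicode forms
    text = _CONTROL_CHARS.sub("", text)              # drop stray control characters
    text = re.sub(r"[ \t]+", " ", text)              # collapse runs of spaces and tabs
    text = re.sub(r"\n{3,}", "\n\n", text).strip()   # limit consecutive blank lines
    if not text:
        raise ValueError("input is empty after cleaning")
    if len(text) > max_chars:
        raise ValueError(f"input exceeds {max_chars} characters")
    return text
```

Rejecting over-length input with an error, rather than silently truncating it, is a design choice made here so the caller can decide how to shorten the text.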

6. Set Personas and Tone

Specify the tone and persona that best fits your audience, whether it’s professional, conversational, or empathetic. Aligning these elements across workflows ensures consistency, especially when chaining prompts [1][2].
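
To keep a persona consistent across chained prompts, one option is to define it once and reuse it in every call. The sketch below assumes the role/content message shape used by many chat-style APIs; the `PERSONA` text and `build_messages` are hypothetical.

```python
from typing import Dict, List

# Hypothetical shared persona, defined once so every step in a chain uses the same voice.
PERSONA = (
    "You are a support assistant for a software product. "
    "Use a professional, empathetic tone and plain language."
)


def build_messages(user_text: str, persona: str = PERSONA) -> List[Dict[str, str]]:
    """Pair the shared persona with a user message in role/content form."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("My export keeps failing. What should I check?")
```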

7. Use Version Control for Prompts

Track changes and maintain consistency by implementing version control. This allows you to roll back updates and monitor how prompts evolve over time.
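
In practice, teams often keep prompts in Git or a dedicated platform. The in-memory sketch below only illustrates the underlying idea of an append-only history with rollback; `PromptRegistry` and its methods are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store that keeps every version of each named prompt."""
    _history: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, text: str) -> int:
        """Store a new version if the text changed; return the current version number."""
        versions = self._history.setdefault(name, [])
        if not versions or versions[-1] != text:
            versions.append(text)
        return len(versions)

    def get(self, name: str, version: int = 0) -> str:
        """Fetch a version (1-based); 0 means the latest."""
        versions = self._history[name]
        return versions[version - 1] if version else versions[-1]

    def rollback(self, name: str, version: int) -> int:
        """Re-save an older version as the newest one, keeping history intact."""
        return self.save(name, self.get(name, version))

    @staticmethod
    def fingerprint(text: str) -> str:
        """Short content hash, useful for tagging logs with the exact prompt used."""
        return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
```

Rolling back by appending, instead of deleting newer versions, preserves the full record of how a prompt evolved.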

8. Fine-Tune Model Parameters

Adjusting sampling parameters like temperature and top_p at request time (this is separate from fine-tuning the model's weights) can tailor the model’s behavior to your needs:

| Parameter | Low Value Use Case | High Value Use Case |
|---|---|---|
| Temperature | Factual responses, technical writing | Creative content, brainstorming |
| top_p | Precise answers, data analysis | Open-ended responses, idea generation |

Once adjusted, test the parameters regularly to maintain performance.
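
One way to keep these settings consistent is to store them as named presets per task type. The values below are illustrative starting points, not recommendations, and `PRESETS` and `sampling_params` are hypothetical names; valid ranges also differ between providers, so check your provider's documentation.

```python
from typing import Dict

# Hypothetical presets; the numbers are illustrative, not tuned values.
PRESETS: Dict[str, Dict[str, float]] = {
    "factual": {"temperature": 0.2, "top_p": 0.5},
    "creative": {"temperature": 0.9, "top_p": 0.95},
}


def sampling_params(task_type: str) -> Dict[str, float]:
    """Return a copy of the preset for a task type after basic range checks."""
    if task_type not in PRESETS:
        raise KeyError(f"unknown task type: {task_type}")
    params = dict(PRESETS[task_type])  # copy so callers cannot mutate the preset
    if params["temperature"] < 0:
        raise ValueError("temperature must be non-negative")
    if not 0 < params["top_p"] <= 1:
        raise ValueError("top_p must be in (0, 1]")
    return params
```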

9. Continuously Test and Improve Prompts

Prompt optimization is an ongoing process. Use these strategies to refine them:

- Run A/B tests regularly

- Monitor response quality metrics

- Collect user feedback

- Update prompts based on performance data [1][2]
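
As a simpler offline stand-in for a live A/B test, the sketch below runs every prompt variant on the same inputs and averages a quality score. `compare_variants`, `fake_model`, and `brevity_score` are hypothetical; a real setup would call your model and use a metric that matters for your task.

```python
from statistics import mean
from typing import Callable, Dict, List


def compare_variants(
    variants: Dict[str, str],
    inputs: List[str],
    call_model: Callable[[str], str],
    score: Callable[[str, str], float],
) -> Dict[str, float]:
    """Run every variant on every input and return the mean score per variant."""
    if not inputs:
        raise ValueError("need at least one input")
    results: Dict[str, float] = {}
    for name, template in variants.items():
        scores = [score(text, call_model(template.format(input=text))) for text in inputs]
        results[name] = mean(scores)
    return results


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM: replies briefly only when the prompt asks for brevity."""
    return "Short answer." if "brief" in prompt.lower() else "A much longer answer. " * 5


def brevity_score(_input: str, output: str) -> float:
    """Toy metric: 1.0 if the reply is at most 50 characters, else 0.0."""
    return 1.0 if len(output) <= 50 else 0.0


report = compare_variants(
    {"A": "Answer the question: {input}", "B": "Answer briefly: {input}"},
    ["What is top_p?", "What is temperature?"],
    fake_model,
    brevity_score,
)
```

Scoring both variants on identical inputs removes input variation as a source of noise, which a randomized live test would need more traffic to average out.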

Tools and Platforms for Prompt Engineering

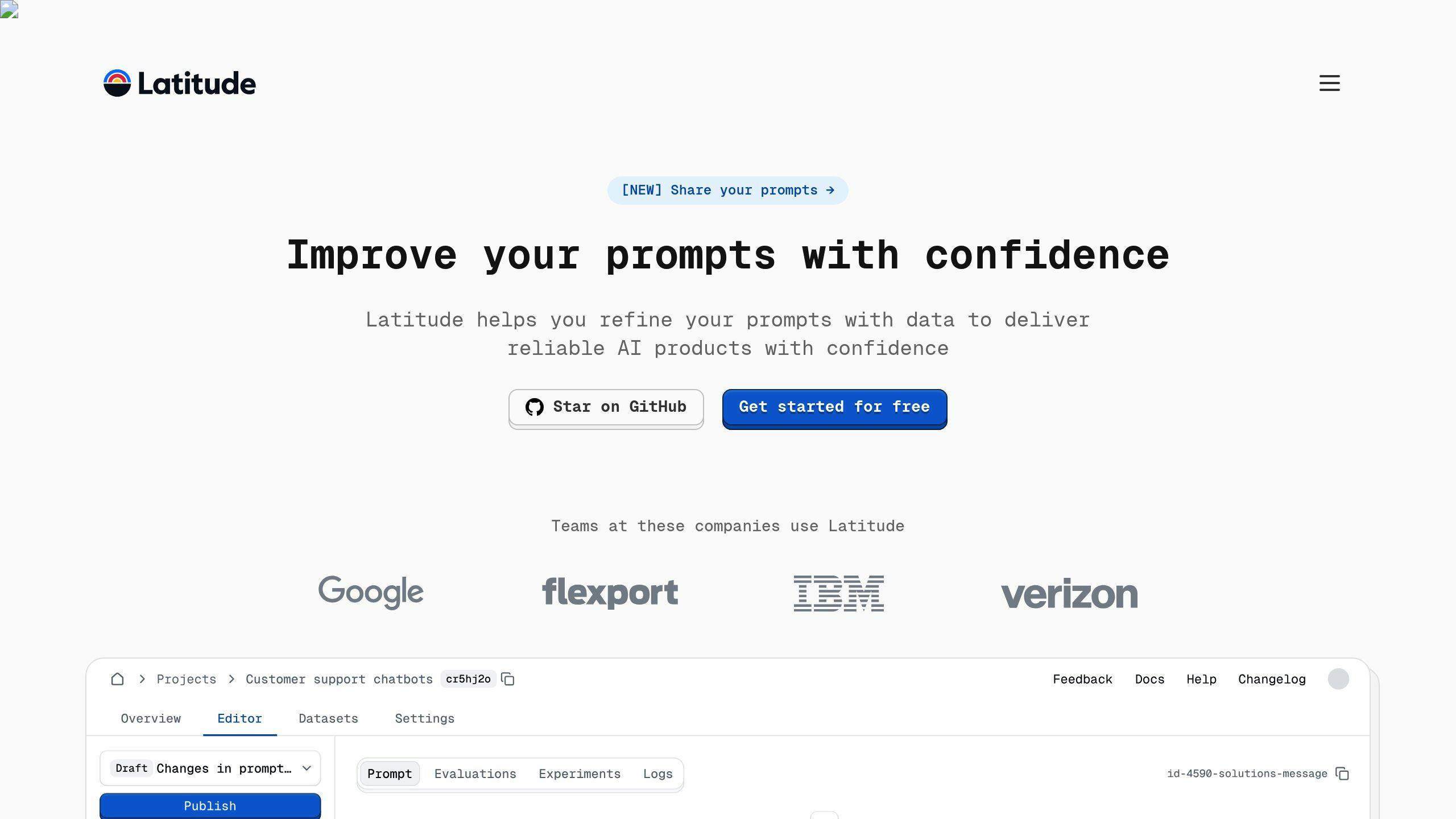

Latitude

Latitude is an open-source platform designed for teams to collaborate and refine prompts effectively. It combines version control with a community-driven environment, making it easier for both technical and non-technical teams to work together. Teams can track changes, ensure consistency, and integrate the platform into existing workflows.

Additional Tools for Prompt Engineering

Several other tools support prompt engineering tasks, including testing, scalability, and workflow management:

| Tool | Focus Area | Features |
|---|---|---|
| OpenAI Playground | Experimentation | Interactive testing, parameter tweaking, real-time feedback |
| LangChain | Workflow Management | Handles complex tasks, automates workflows, supports prompt chaining |
| PromptLayer | Deployment | Focuses on scalability, reliability monitoring, and user experience improvements |

These tools help address challenges like maintaining consistency, scaling workflows, and improving performance. When choosing a tool, look for features such as version control, collaboration capabilities, system integration, automated testing, and clear documentation.

"Prompt engineering is essential to elevating LLM performance, embodying a unique fusion of creative and technical expertise" [2]

Many of these tools can also be integrated into CI/CD pipelines, streamlining testing and deployment. This ensures that prompt engineering processes meet production requirements while remaining efficient and reliable.

Conclusion: Building Reliable Production-Grade LLM Prompts

Key Points

Creating production-grade prompts for large language models (LLMs) requires a structured approach that prioritizes reliability and scalability. Clear, specific, and well-organized prompts help ensure consistent, high-quality outputs. Regular testing is essential to maintain reliability in real-world settings. Version control plays a crucial role in tracking changes and maintaining consistency across updates. Integrating prompts into CI/CD pipelines supports steady, scalable performance in production environments.

With these core principles in place, the focus shifts to practical strategies for embedding prompt engineering into your workflows.

Next Steps

To improve your prompt engineering process, begin by reviewing your current prompts for clarity and precision. Tools like Latitude can assist with collaborative prompt development and version control, enabling both technical and non-technical team members to contribute effectively.

Here’s a suggested implementation plan:

| Phase | Focus Area | Key Activities |
|---|---|---|
| Assessment | Review prompts and find gaps | Document baseline performance |
| Implementation | Apply best practices | Set up version control and conduct testing |
| Optimization | Monitor and refine | Use feedback and data for improvements |

Engaging with professional communities and leveraging platforms for automated testing and deployment will help you stay informed and efficient. As LLMs continue to improve, ongoing adjustments will be key to maintaining strong performance in production.